If you run Python code in a Jupyter notebook, you may run into the “iopub data rate exceeded” error. In this article we look at why the error occurs, where it comes from, and how to get rid of it.

How does the “iopub data rate exceeded” error occur

The “iopub data rate exceeded” error normally occurs when a notebook cell produces more output than the Jupyter server is configured to send to the browser, i.e., we are either printing a large amount of data to the notebook or displaying a large image in it.

This is common when showing massive quantities of data with Python visualization tools such as Plotly, matplotlib, and seaborn, or when using Jupyter to process large volumes of data that end up in the cell output.

Jupyter’s default settings are not meant for output of this size, which results in this error notice.

For example, the code below may produce the “iopub data rate exceeded” error.

    import matplotlib.pyplot as plt
    import numpy as np

    frequency = 100

    def sine(x, phase=0, frequency=frequency):
        return np.sin((frequency * x + phase))

    dist = np.linspace(-0.5, 0.5, 1024)
    x, y = np.meshgrid(dist, dist)
    grid = (x**2 + y**2)
    testpattern = sine(grid, frequency=frequency)

    methods = [None, 'none', 'nearest', 'bilinear', 'bicubic', 'spline16',
               'spline36', 'hanning', 'hamming', 'hermite', 'kaiser', 'quadric',
               'catrom', 'gaussian', 'bessel', 'mitchell', 'sinc', 'lanczos']

    fig, axes = plt.subplots(3, 6, figsize=(384, 192))
    fig.subplots_adjust(hspace=0.3, wspace=0.05)

    for ax, interp_method in zip(axes.flat, methods):
        ax.imshow(testpattern, interpolation=interp_method, cmap='gray')
        ax.set_title(interp_method)

    plt.show()

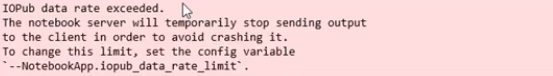

The resulting error message is shown in the image.

Why does this error occur

The error is a safety mechanism: the server temporarily stops sending output so that the client (the browser) does not crash.

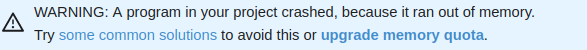

The image shows what it typically looks like when it crashes. To avoid this, we can raise the data rate limit (covered in the sections below).

Source of the error

The “iopub data rate exceeded” error occurs because our code exceeded the maximum allowed IO data transfer rate.

We can get around that by changing a configuration item: the config variable below sets the limit on the allowed IO data transfer rate.

--NotebookApp.iopub_data_rate_limit

“iopub data rate exceeded” error in Mac/Windows/Linux

We first need to create a Jupyter notebook configuration file using the command:

jupyter notebook --generate-config

This creates a new configuration file with all of the default attributes commented out. The process is the same on Windows, Mac, and Linux. After that, find the generated configuration file and follow the steps below.

Update the value of NotebookApp.iopub_data_rate_limit (a Float attribute that specifies the maximum rate, in bytes/sec, at which stream output can be sent on iopub before it is limited) from the default of 1000000 to a larger value such as 10000000, and uncomment the line so that it takes effect.
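As a minimal sketch of that edit, the relevant part of the generated jupyter_notebook_config.py could look like the following once the line is uncommented. The value 10000000 is only an example. On installations built on Jupyter Server, where the error message mentions ServerApp, the attribute would be c.ServerApp.iopub_data_rate_limit instead.

```python
# Fragment of jupyter_notebook_config.py; Jupyter loads this file at startup.
c = get_config()  # get_config() is supplied by Jupyter's config loader, not by plain Python

# Default is 1000000 (bytes/sec). The value below is an illustrative choice.
c.NotebookApp.iopub_data_rate_limit = 10000000
```

This fragment only works when Jupyter itself loads it; running it as a standalone script fails because get_config is not defined there.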

Another option is to start Jupyter notebook with a command-line argument, as shown below. You can use any numerical value in place of the number in the command.

jupyter notebook --NotebookApp.iopub_data_rate_limit=10000000000

In most cases this resolves the “iopub data rate exceeded” error.

Resolving this error without changing the data limit

To solve this problem without changing the data rate limit, you can try one of the following options:

- Reduce the size of the data that you are trying to display or generate. For example, you can resize or compress an image before displaying it, or you can limit the number of rows that you print in a cell.

- Use a library that can handle large amounts of data more efficiently. For example, the “datashader” library is designed to handle huge datasets and can be used to create visualizations that are less demanding on the Jupyter server.

- Use a different tool to display or process the data. For example, if you are trying to display a very large image, you may be able to use an image viewer outside of Jupyter to view it more efficiently. If the image is being loaded into the notebook directly from the internet, we can download it first and then open it in a separate picture viewer.
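As a rough sketch of the first option, the helper below prints only the beginning and end of a long sequence instead of every item. The name preview and its head and tail parameters are hypothetical, chosen for this illustration.

```python
def preview(items, head=5, tail=5):
    """Return a short text preview of a long sequence instead of all of it."""
    items = list(items)
    if len(items) <= head + tail:
        return "\n".join(str(item) for item in items)
    skipped = len(items) - head - tail
    lines = [str(item) for item in items[:head]]
    lines.append(f"... ({skipped} more items) ...")
    # Slice from an explicit index so that tail=0 yields no trailing items.
    lines.extend(str(item) for item in items[len(items) - tail:])
    return "\n".join(lines)


rows = range(100_000)  # stand-in for a large result
print(preview(rows, head=3, tail=2))
```

The cell then emits a handful of lines no matter how large the underlying data is.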

Resolving the error in Colab

We get this error when we try to print a huge chunk of data to the console. The simplest way out is to comment out the print line, download the data, and read it in smaller pieces for further computation.

Getting rid of the error in JupyterLab

As with Colab, we can comment out the print line that is causing the data limit to be exceeded and carry on with the computation in smaller pieces.

Solving the “iopub data rate exceeded” error in the anaconda prompt

- Find the Anaconda prompt by typing ‘Anaconda Prompt’ in the search bar.

- Enter ‘jupyter notebook --generate-config’ in the prompt window and press return.

- Locate the newly created file on the hard disk.

- Open it in a text editor and locate the line that says ‘#c.NotebookApp.iopub_data_rate_limit = 1000000’.

- Remove the leading # to uncomment the line, then add a 0 to the string of zeros or enter any larger value of your liking.

- Save the file, then restart Jupyter Notebook, Anaconda, and everything else linked to it. The error should not occur again.
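The manual edit in the steps above can also be sketched in code. The function below works on the text of a config file: it uncomments the iopub_data_rate_limit line and sets a new value. The name raise_iopub_limit is hypothetical, and the sample text stands in for a real config file, so treat this as an illustration rather than a tool to run against your installation unchanged.

```python
import re


def raise_iopub_limit(config_text, new_limit):
    """Uncomment the iopub_data_rate_limit line and set it to new_limit."""
    pattern = re.compile(
        r"^#?[ \t]*(c\.(?:NotebookApp|ServerApp)\.iopub_data_rate_limit)[ \t]*=.*$",
        flags=re.MULTILINE,
    )
    updated, count = pattern.subn(
        lambda match: f"{match.group(1)} = {new_limit}", config_text
    )
    if count == 0:
        # Setting not found: append it (assuming the classic NotebookApp name).
        updated = (
            config_text.rstrip("\n")
            + f"\nc.NotebookApp.iopub_data_rate_limit = {new_limit}\n"
        )
    return updated


sample = (
    "# c.NotebookApp.ip = 'localhost'\n"
    "#c.NotebookApp.iopub_data_rate_limit = 1000000\n"
)
print(raise_iopub_limit(sample, 10000000))
```

Working on a string keeps the sketch side-effect free; reading and writing the actual file is left to the caller.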

FAQs

What is Jupyter Notebook?

It is a free and open-source web application for creating and sharing documents that contain code, equations, and visualizations.

What languages can we use in Jupyter notebook?

We can code in over 40 programming languages in Jupyter notebook, like Python, R, and Scala.

What is a jupyter notebook kernel?

A kernel is a runtime environment that allows the programming languages of the Jupyter Notebook application to be executed.

How to change the limit of the Jupyter Notebook?

Simply opening the Jupyter Notebook with the following command helps us in changing its limit.

`jupyter notebook --NotebookApp.iopub_data_rate_limit=Value_of_liking`

I get the same error message in JupyterLab 3.6.3 (on Python 3.10.0 on Windows 10) when I use help() on pandas.

Although the help() function is not an explicit print call, the pandas documentation is hundreds of pages long, so it probably exceeds the amount of output that JupyterLab or Jupyter Notebook will display.

help() returns None, so it presumably writes its text to standard output internally, which has the same effect as calling print().

    import pandas
    help(pandas)

    IOPub data rate exceeded. The Jupyter server will temporarily stop
    sending output to the client in order to avoid crashing it. To change
    this limit, set the config variable
    --ServerApp.iopub_data_rate_limit.

I get the same error message from Jupyter Classic NB started from the Help menu of JupyterLab 3.6.3.

    IOPub data rate exceeded. The Jupyter server will temporarily stop
    sending output to the client in order to avoid crashing it. To change
    this limit, set the config variable
    --ServerApp.iopub_data_rate_limit.

    Current values:
    ServerApp.iopub_data_rate_limit=1000000.0 (bytes/sec)
    ServerApp.rate_limit_window=3.0 (secs)

Server Information:

You are using Jupyter NbClassic.

Jupyter Server v2.5.0

Jupyter nbclassic v0.5.3 (started using the “Launch Jupyter Classic Notebook” dropdown entry within the JupyterLab “Help” menu)

The solution above ( jupyter notebook --NotebookApp.iopub_data_rate_limit=1.0e10 ) eliminated the help() function output error message. It gives me the full pandas help documentation within the JupyterLab NB output cell.

Thanks for the answers.
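For the help() case described in the comment above, another possible approach is to render the help text to a string with the standard pydoc module and show only its first lines, or save the full text to a file. The helper names help_preview and help_to_file are hypothetical, and json is used here only as a small stand-in for pandas.

```python
import json  # small stand-in for a large package such as pandas
import pydoc


def help_preview(obj, max_lines=40):
    """Return the first max_lines lines of the help text for obj."""
    text = pydoc.render_doc(obj, renderer=pydoc.plaintext)
    lines = text.splitlines()
    shown = lines[:max_lines]
    if len(lines) > max_lines:
        shown.append(f"... ({len(lines) - max_lines} more lines not shown)")
    return "\n".join(shown)


def help_to_file(obj, path):
    """Write the full help text for obj to path; return its length in characters."""
    text = pydoc.render_doc(obj, renderer=pydoc.plaintext)
    with open(path, "w", encoding="utf-8") as handle:
        handle.write(text)
    return len(text)


print(help_preview(json, max_lines=10))
```

Either helper keeps the cell output small, so the limit does not need to be raised just to read documentation.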

You might have run into this odd issue while running your Python code in a Jupyter notebook.

Here is a full description of the issue.

IOPub data rate exceeded. The notebook server will temporarily stop sending output to the client in order to avoid crashing it. To change this limit, set the config variable `--NotebookApp.iopub_data_rate_limit`. Current values: NotebookApp.iopub_data_rate_limit=1000000.0 (bytes/sec) NotebookApp.rate_limit_window=3.0 (secs)

The issue has happened because your program or code has breached the maximum allowed IO data transfer rate. You can easily get around it by updating a few configuration entries.

Option 1

You can create a Jupyter notebook configuration file:

$ jupyter notebook --generate-config

This will create a new configuration file with all the default attributes commented out.

Try updating the attribute below from the default of 1000000 to, say, 10000000, and uncomment the line:

- NotebookApp.iopub_data_rate_limit (Float): the maximum rate, in bytes/sec, at which stream output can be sent on iopub before it is limited.

Option 2

Re-open Jupyter notebook with a command-line argument as follows. Run the command below from the Command Prompt or a terminal:

jupyter notebook --NotebookApp.iopub_data_rate_limit=10000000000

When does the issue happen?

1. You are trying to print a huge amount of data to the notebook.

Example: printing in a big loop, help(np)

2. You are trying to display a large picture in the notebook.

Example:

    from IPython.display import Image
    Image(filename='path_to_image/image.png')

IOPub data rate exceeded.

The notebook server will temporarily stop sending output

to the client in order to avoid crashing it.

To change this limit, set the config variable

--NotebookApp.iopub_data_rate_limit.

Current values:

NotebookApp.iopub_data_rate_limit=1000000.0 (bytes/sec)

NotebookApp.rate_limit_window=3.0 (secs)

Ankur Ankan

asked Jun 4, 2018 at 22:48

An IOPub error usually occurs when you try to print a large amount of data to the console. Check your print statements: if you’re trying to print a file that exceeds 10MB, it’s likely that this caused the error. Try to read smaller portions of the file/data.

I faced this issue while reading a file from Google Drive to Colab.

I used this link https://colab.research.google.com/notebook#fileId=/v2/external/notebooks/io.ipynb

and the problem was in this block of code

    # Download the file we just uploaded.
    #
    # Replace the assignment below with your file ID
    # to download a different file.
    #
    # A file ID looks like: 1uBtlaggVyWshwcyP6kEI-y_W3P8D26sz
    file_id = 'target_file_id'

    import io
    from googleapiclient.http import MediaIoBaseDownload

    request = drive_service.files().get_media(fileId=file_id)
    downloaded = io.BytesIO()
    downloader = MediaIoBaseDownload(downloaded, request)
    done = False
    while done is False:
        # _ is a placeholder for a progress object that we ignore.
        # (Our file is small, so we skip reporting progress.)
        _, done = downloader.next_chunk()

    downloaded.seek(0)

    #Remove this print statement
    #print('Downloaded file contents are: {}'.format(downloaded.read()))

I had to remove the last print statement, print('Downloaded file contents are: {}'.format(downloaded.read())), since its output exceeded the 10MB limit in the notebook.

Your file will still be downloaded and you can read it in smaller chunks or read a portion of the file.
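Reading the buffer in smaller chunks could look like the sketch below. It uses an in-memory io.BytesIO filled with dummy bytes as a stand-in for the downloaded buffer, and the generator name iter_chunks and the 64 KiB chunk size are assumptions made for this example.

```python
import io


def iter_chunks(stream, chunk_size=64 * 1024):
    """Yield successive chunks of at most chunk_size bytes from a binary stream."""
    while True:
        chunk = stream.read(chunk_size)
        if not chunk:
            break
        yield chunk


# Stand-in for the `downloaded` buffer from the code above.
downloaded = io.BytesIO(b"x" * 200_000)

# Print a short preview and a size summary instead of the whole payload.
preview = downloaded.read(80)
downloaded.seek(0)
total = sum(len(chunk) for chunk in iter_chunks(downloaded))
print(f"first bytes: {preview[:20]!r}")
print(f"total size: {total} bytes")
```

Only two short lines reach the notebook output, regardless of how large the file is.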

ccpizza

answered Jul 31, 2018 at 8:15

Srishti

The above answer is correct. I just commented out the print statement and the error went away. I am keeping this here in case someone finds it useful. Suppose you are reading a CSV file from Google Drive: just import pandas and add pd.read_csv(downloaded), and it will work just fine.

    file_id = 'FILEID'

    import io
    import pandas as pd
    from googleapiclient.http import MediaIoBaseDownload

    request = drive_service.files().get_media(fileId=file_id)
    downloaded = io.BytesIO()
    downloader = MediaIoBaseDownload(downloaded, request)
    done = False
    while done is False:
        # _ is a placeholder for a progress object that we ignore.
        # (Our file is small, so we skip reporting progress.)
        _, done = downloader.next_chunk()

    downloaded.seek(0)

    # Keep the result in a variable instead of echoing it to the output.
    df = pd.read_csv(downloaded)

answered Oct 12, 2018 at 19:25

Maybe this will help (via sv1997, “IOPub Error on Google Colaboratory in Jupyter Notebook”):

The IOPub error occurs in Colab because you are trying to display very large output in the console itself (e.g., with print() statements).

The IOPub error is most likely related to a print call.

So delete or comment out the print call. That may resolve the error.

answered Aug 19, 2020 at 5:32

DIGMASTER97

    %cd darknet
    !sed -i 's/OPENCV=0/OPENCV=1/' Makefile
    !sed -i 's/GPU=0/GPU=1/' Makefile
    !sed -i 's/CUDNN=0/CUDNN=1/' Makefile
    !sed -i 's/CUDNN_HALF=0/CUDNN_HALF=1/' Makefile
    !apt update
    !apt-get install libopencv-dev

It’s important to update your Makefile. Also, make sure your input file name is correct.

answered Apr 6, 2021 at 20:28

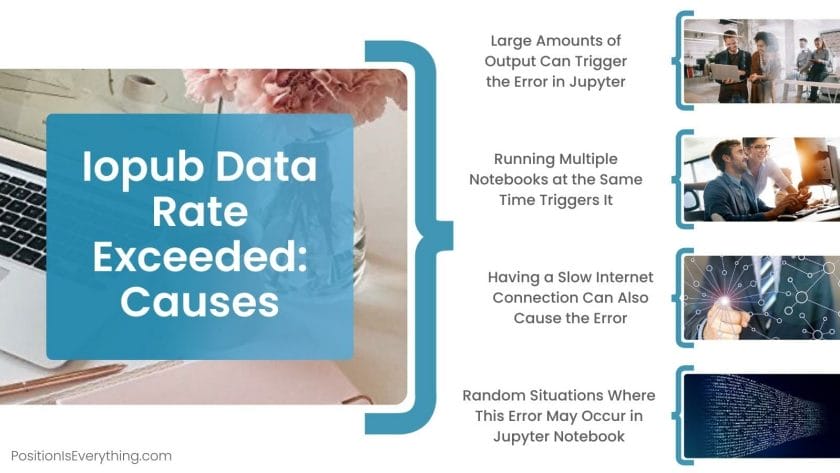

Iopub data rate exceeded is an error message you may come across when the Jupyter notebook is sending too much data to the output channel.

This can happen when there is a large amount of output, such as when printing many lines of text or displaying a large image. In this post, you will learn why it occurs and how to resolve it.

Contents

- Why Am I Getting the Iopub Data Rate Exceeded in Jupyter Notebook?

- – Large Amounts of Output Can Trigger the Error in Jupyter

- – Running Multiple Notebooks at the Same Time Triggers It

- – Having a Slow Internet Connection Can Also Cause the Error

- – Random Situations Where This Error May Occur in Jupyter Notebook

- What Are the Best Solutions to This Error in Jupyter?

- – Consider Decreasing the Amount of Data Sent to the Output

- – Close Unnecessary Notebooks To Alleviate This Error

- – Other Ways You Can Solve This Problem With Jupyter Notebook

- Conclusion

Why Am I Getting the Iopub Data Rate Exceeded in Jupyter Notebook?

The reason you are getting the “iopub data rate exceeded” error message in Jupyter Notebook is that the amount of data being sent to the output channel exceeds the maximum data rate limit set by the notebook.

This happens especially when there is a large amount of output, like when printing many lines of text or displaying a large image. Another common cause of the error is running multiple notebooks at the same time.

Running several notebooks can put a strain on the system and cause the data rate limit to be exceeded. In addition, a slow internet connection can also cause this error, as the notebook may have difficulty sending data to the output channel.

– Large Amounts of Output Can Trigger the Error in Jupyter

When running a Jupyter Notebook, the output generated by the code cells is sent to the output channel. This output can include text, images, plots, and other types of data. The amount of data being sent to the output channel is known as the data rate.

When the amount of output generated by the code cells exceeds the maximum data rate limit set by the notebook, the iopub data rate exceeded error message appears. The server stops forwarding output at that point so that the large amount of data does not overwhelm the client.

There are several ways that large amounts of output can be generated in a Jupyter Notebook. One of the common ways is using the “print” statement to output large amounts of text. For example, if a code cell contains a loop that prints a large number of lines, this can cause the data rate limit to be exceeded.

Another way you can generate large amounts of output is by displaying large images or plots. If a code cell contains a command to display a large image or plot, this can cause the data rate limit to be exceeded.

In addition, some computations and data manipulation can also generate a lot of output data, especially when working with large data sets.

It is important to remember that the data rate limit can be exceeded not only by the output you see in the notebook but also by the data sent to the background. This includes large data sets and even logging information.

– Running Multiple Notebooks at the Same Time Triggers It

If you run multiple Jupyter Notebooks at the same time, this can put a strain on the system and cause the error to appear. This is because each notebook uses a certain amount of system resources.

These include memory and CPU, and when multiple notebooks are running at the same time, these resources can become overloaded.

When a notebook runs, it uses some system resources to handle the output data. Therefore, when multiple notebooks are running, it can cause the system resources to be overused, leading to the mentioned error.

Running multiple notebooks simultaneously can also cause conflicts between them, such as when they try to access the same resources or files. This can further contribute to the error.

Another reason is that when multiple notebooks are running at the same time, they may be sending output to the same output channel. As a result, it can cause the data rate limit to be exceeded.

– Having a Slow Internet Connection Can Also Cause the Error

A slow internet connection can also cause this error in Jupyter Notebook. This is because when the notebook is sending output to the output channel, it needs to have a stable and fast internet connection in order to send the data quickly and efficiently.

When the internet connection is slow, it can take longer for the data to be sent to the output channel. This can cause the data rate limit to be exceeded since the notebook is not able to send the data fast enough. In addition, the data might get lost or corrupted during transmission.

Another way that a slow internet connection can lead to this error is if the notebook is trying to access large files or data sets that are stored on a remote server. This is especially true when dealing with data science projects. If the internet connection is slow, it may take longer to download these files, which can further contribute to the error.

– Random Situations Where This Error May Occur in Jupyter Notebook

The iopub data rate exceeded error can occur in various situations when using Jupyter Notebook. Below are some common scenarios where you may come across this error:

- Iopub data rate exceeded. google colab: The error can also occur when using Google Colab, which is a cloud-based platform for running Jupyter Notebooks.

- Iopub data rate exceeded. jupyter lab: This error can also occur when using JupyterLab, which is an interactive development environment for Jupyter Notebooks.

- Iopub data rate exceeded pycharm: The error can also occur when using PyCharm, which is an integrated development environment (IDE) for Python. PyCharm has built-in Jupyter Notebook support, and the error can also occur when running code cells that generate large amounts of output or working with large data sets.

- Iopub data rate exceeded anaconda: The error can occur when using Anaconda, which is a distribution of Python and R for scientific computing and data science. Anaconda includes Jupyter Notebook as one of its components, and the error can occur while running code cells that generate large amounts of output or while working with large data sets.

- Iopub data rate exceeded mac: This error can occur on a Mac when running Jupyter Notebook, whether it is through Anaconda or other means.

- Iopub message rate exceeded kaggle: The error can also be triggered when using Jupyter Notebooks on Kaggle, which is a platform for data science and machine learning competitions.

- iopub_data_rate_limit colab: In Google Colab, you can use the NotebookApp.iopub_data_rate_limit configuration parameter in the same way as in Jupyter Notebook. It allows you to raise the data rate limit of the iopub channel to a higher value.

- Iopub data rate exceeded. the notebook server will temporarily stop sending output colab: This error message indicates that the Google Colab server is temporarily halting the output to prevent further data rate exceeding.

What Are the Best Solutions to This Error in Jupyter?

The best solutions to the iopub data rate exceeded error include updating a few configuration entries to raise the limit on the amount of data that can be sent to the output channel. The most important configuration entry to consider is NotebookApp.iopub_data_rate_limit in Jupyter Notebook.

This configuration entry controls the maximum data rate (in bytes/sec) at which output can be sent on the output channel (iopub). By increasing the value of this parameter, you can increase the amount of data that can be sent to the output channel before the error occurs.

Additionally, you can update other configuration entries, such as NotebookApp.rate_limit_window. This parameter controls the window of time (in seconds) over which the rate limit applies; setting it to a higher value can also help to solve this error.

That said, it is important to note that increasing the values of these configuration entries may impact the notebook’s performance. Therefore it is advisable you check the system resources usage before increasing these values.
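To get a feel for how a bytes/sec limit and a time window interact, here is a deliberately simplified model. It is not Jupyter’s actual implementation: the class name RateLimitModel and its bookkeeping are assumptions made for illustration, and only the default values (1000000 bytes/sec and a 3.0 second window) are taken from the error message.

```python
from collections import deque


class RateLimitModel:
    """Toy model of a bytes/sec limit averaged over a sliding time window."""

    def __init__(self, data_rate_limit=1_000_000, rate_limit_window=3.0):
        self.data_rate_limit = data_rate_limit      # bytes/sec
        self.rate_limit_window = rate_limit_window  # secs
        self._events = deque()                      # (timestamp, byte_count)
        self._bytes_in_window = 0

    def record(self, timestamp, byte_count):
        """Record one output message; return True if the limit is exceeded."""
        # Forget messages that are older than the window.
        cutoff = timestamp - self.rate_limit_window
        while self._events and self._events[0][0] <= cutoff:
            _, old_bytes = self._events.popleft()
            self._bytes_in_window -= old_bytes
        self._events.append((timestamp, byte_count))
        self._bytes_in_window += byte_count
        data_rate = self._bytes_in_window / self.rate_limit_window
        return data_rate > self.data_rate_limit


model = RateLimitModel()
print(model.record(0.0, 2_000_000))  # 2 MB inside the window
print(model.record(1.0, 2_000_000))  # 4 MB inside the window
```

Under this model, the defaults allow about 3,000,000 bytes within any 3 second window, which is why a single large print or figure can trip the limit while the same data spread over a longer time does not.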

– Consider Decreasing the Amount of Data Sent to the Output

One of the simplest solutions to this error is to decrease the amount of output generated by the code cells. You can accomplish this by removing unnecessary print statements, reducing the size of images or plots displayed, or optimizing the code to generate less output.

For instance, if a code cell contains a loop that prints a large number of lines, this can cause the data rate limit to be exceeded. By decreasing the number of print statements or removing them altogether, the amount of output will be decreased, which will go a long way in avoiding this error.

Similarly, by reducing the size of the image or plot, or displaying it in a lower resolution, the amount of output will be decreased. As a result, you will avoid the error altogether.
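One way to cut down loop output, sketched here with a hypothetical helper name and a trivial stand-in workload, is to report progress only every so many iterations rather than on each one.

```python
def run_with_sparse_logging(n_steps, log_every=1000):
    """Run a loop and collect a status line only every log_every steps."""
    messages = []
    total = 0
    for step in range(1, n_steps + 1):
        total += step  # stand-in for the real work done in each iteration
        if step % log_every == 0 or step == n_steps:
            messages.append(f"step {step}/{n_steps}, running total = {total}")
    return messages


for line in run_with_sparse_logging(10_000, log_every=2_500):
    print(line)
```

Here 10,000 iterations produce four lines of output instead of 10,000.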

– Close Unnecessary Notebooks To Alleviate This Error

Closing unnecessary notebooks that you are not currently using can help to alleviate this problem. When you close a notebook, it stops using system resources, and this can help to free up resources that can be used by other notebooks.

For example, if you have several notebooks open and are only actively working on one of them, closing the other notebooks can help decrease the amount of resources being used. This, in turn, can help to prevent the error from occurring. Keep in mind that this is one of the easiest ways of avoiding this error.

– Other Ways You Can Solve This Problem With Jupyter Notebook

Another way you can solve this error is by optimizing the internet connection by closing unnecessary applications or programs that may be using the internet connection. Also, to avoid this error, you should consider reducing the size of the data sets you use in the notebook.

Conclusion

In this post, you have learned the various causes and solutions to the iopub data rate exceeded error in Jupyter Notebook. To recap, here is a summary of the article:

- The error indicates that your code or program has breached the set maximum data transfer rate.

- This error arises from various factors, such as large amounts of output generated by code cells and running multiple notebooks at the same time.

- Also, you can trigger the error when using a slow internet connection, working with large data sets, and doing heavy computations.

- Solutions include increasing the limit on the iopub data rate, decreasing the amount of output generated by code cells, and closing unnecessary notebooks.

- You should also consider optimizing internet connection and reducing the size of data sets.

Now that you know the causes and solutions to this error, you can effectively handle it whenever it arises.
