Having mastered color filters, let's move on to another important machine-vision tool: contour detection.

A contour is the visible edge of an object, the boundary that separates it from the background. In practice, many image-analysis methods work with contours rather than with raw pixels. The body of techniques for working with contours is called contour analysis.

As a test image, let's take something with clearly nested contours, some kind of disc, and apply OpenCV's standard functions for finding and visualizing object contours.

1. The OpenCV function for finding contours: findContours

OpenCV finds contours with the findContours function, which has the form:

findContours( frame, retrieval_mode, approximation_method [, contours[, hierarchy[, offset]]])

frame: the image, properly prepared for analysis. It must be an 8-bit single-channel image. Contour search treats it as binary: every non-zero pixel is interpreted as 1, and zero pixels stay 0. The lesson on finding colored objects had exactly the same situation.

retrieval_mode: one of four modes for grouping the contours found:

- CV_RETR_LIST: returns all contours without any grouping;

- CV_RETR_EXTERNAL: returns only the outermost contours. For example, if the frame contains a donut, the function returns its outer boundary without the hole;

- CV_RETR_CCOMP: groups contours into a two-level hierarchy. The top level holds the outer boundaries of objects, and the second level holds the boundaries of holes, if any. A contour nested inside a hole goes back to the top level;

- CV_RETR_TREE: groups contours into a full multi-level hierarchy.

approximation_method: one of the following contour approximation (compression) methods:

- CV_CHAIN_APPROX_NONE: no compression; every point of the contour is stored, so any two consecutive points are neighboring pixels;

- CV_CHAIN_APPROX_SIMPLE: compresses horizontal, vertical, and diagonal segments, keeping only their end points;

- CV_CHAIN_APPROX_TC89_L1, CV_CHAIN_APPROX_TC89_KCOS: apply one of the variants of the Teh-Chin chain approximation algorithm.

In the Python bindings used below, these constants are written without the CV_ prefix, for example cv.RETR_TREE and cv.CHAIN_APPROX_SIMPLE.

contours: the list of all contours found, each represented as a vector of points;

hierarchy: information about contour topology. Each element is a set of four indices that describes contour[i]:

- hierarchy[i][0]: index of the next contour on the same level;

- hierarchy[i][1]: index of the previous contour on the same level;

- hierarchy[i][2]: index of the first nested (child) contour;

- hierarchy[i][3]: index of the parent contour.

An index of -1 means that no such contour exists.

offset: the shift applied to every contour point.

2. The OpenCV function for drawing contours: drawContours

It would be nice to draw the contours returned by findContours on the frame. The machine does not need this, but it helps us see what the contours found by the algorithm look like. Another useful function, drawContours, does this:

drawContours( frame, contours, index, color[, thickness[, line_type[, hierarchy[, max_level[, offset]]]]])

frame: the image on which the contours are drawn;

contours: the contours found by findContours;

index: index of the contour to draw, or -1 to draw all contours;

color: contour color;

thickness: thickness of the contour line;

line_type: line connectivity type (for example, cv.LINE_AA for an anti-aliased line);

hierarchy: information about the contour hierarchy;

max_level: the maximum nesting level to draw. If it is 0, only the selected contour is drawn. If it is 1, the selected contour and all its children are drawn. If it is 2, the selected contour, its children, and the children's children are drawn, and so on.

offset: the shift applied to every contour point.
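The max_level rule for a single selected contour can be sketched in plain Python. This is only an illustration of the description above, not OpenCV's implementation; the function name `contours_to_draw` and the sample hierarchy (two rings, each with one hole) are hypothetical.

```python
def contours_to_draw(hierarchy, index, max_level):
    """Return the indices drawn for one selected contour.

    hierarchy rows are [next, previous, first_child, parent];
    -1 means "no such contour".
    """
    selected = [index]
    if max_level > 0:
        child = hierarchy[index][2]          # first nested contour
        while child != -1:
            # each child may bring its own children, one level deeper
            selected += contours_to_draw(hierarchy, child, max_level - 1)
            child = hierarchy[child][0]      # next sibling on the same level
    return selected


# Hypothetical hierarchy: contours 0 and 2 are outer, 1 and 3 are their holes.
sample = [
    [2, -1, 1, -1],
    [-1, -1, -1, 0],
    [-1, 0, 3, -1],
    [-1, -1, -1, 2],
]

print(contours_to_draw(sample, 0, 0))
print(contours_to_draw(sample, 0, 1))
print(contours_to_draw(sample, 2, 1))
```

Note that a real hierarchy returned by findContours has shape (1, N, 4), so `hierarchy[0]` would be passed to a helper like this one.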

3. A program that finds and draws contours

Now let's write a program that finds the contours of objects in a frame with donuts. First, the image has to be prepared. Remember that findContours works with a binary image, so we run the picture through a color filter that makes the donuts pure white and the background black.

    import numpy as np
    import cv2 as cv

    # color filter parameters
    hsv_min = np.array((2, 28, 65), np.uint8)
    hsv_max = np.array((26, 238, 255), np.uint8)

    if __name__ == '__main__':
        fn = 'image.jpg'  # path to the image file
        img = cv.imread(fn)

        hsv = cv.cvtColor(img, cv.COLOR_BGR2HSV)  # convert the color model from BGR to HSV
        thresh = cv.inRange(hsv, hsv_min, hsv_max)  # apply the color filter

        # find the contours and store them in contours;
        # findContours returns 3 values in OpenCV 3.x and 2 values in 4.x, so keep the last two
        contours, hierarchy = cv.findContours(thresh.copy(), cv.RETR_TREE, cv.CHAIN_APPROX_SIMPLE)[-2:]

        # draw the contours on top of the image
        cv.drawContours(img, contours, -1, (255, 0, 0), 3, cv.LINE_AA, hierarchy, 1)

        cv.imshow('contours', img)  # show the result in a window
        cv.waitKey()
        cv.destroyAllWindows()

As a result, we get the donuts with their outer and nested boundaries highlighted.

Now let's see how the index and max_level parameters affect which contours are drawn.

The findContours algorithm found four closed contours on the donuts. If we print the hierarchy to the console with an ordinary print, we see the following table:

    [ 2 -1  1 -1] - outer contour of the first donut
    [-1 -1 -1  0] - hole of the first donut
    [-1  0  3 -1] - outer contour of the second donut
    [-1 -1 -1  2] - hole of the second donut

There are two contours on the top level of the hierarchy. They are easy to spot by the fourth value, the index of the parent contour, which is -1. There are also two nested contours: one inside the first outer contour and one inside the second.
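The same reading of the table can be done in code. The sketch below copies the four rows printed above into a plain list; the helper name `contour_depth` is an assumption made for illustration. With a real result from findContours, `hierarchy[0]` would take the place of the list.

```python
# Rows copied from the table above: [next, previous, first_child, parent].
hierarchy = [
    [2, -1, 1, -1],   # outer contour of the first donut
    [-1, -1, -1, 0],  # hole of the first donut
    [-1, 0, 3, -1],   # outer contour of the second donut
    [-1, -1, -1, 2],  # hole of the second donut
]


def contour_depth(hierarchy, i):
    """Count how many parents contour i has (0 for a top-level contour)."""
    depth = 0
    parent = hierarchy[i][3]
    while parent != -1:
        depth += 1
        parent = hierarchy[parent][3]
    return depth


# Top-level contours have no parent.
top_level = [i for i, row in enumerate(hierarchy) if row[3] == -1]
# Map every nested contour to the contour that contains it.
parent_of = {i: row[3] for i, row in enumerate(hierarchy) if row[3] != -1}
depths = [contour_depth(hierarchy, i) for i in range(len(hierarchy))]

print(top_level, parent_of, depths)
```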

In the program index = -1, so drawContours should draw all four contours found. But there is also the max_level parameter. How does it affect the output? Let's watch its behavior in an animation:

Note: the upper trackbar shows contour = 0, although we are talking about -1. This is not a mistake. In that trackbar position the function actually receives index = -1. The mismatch comes from a limitation of OpenCV trackbars: they cannot take negative values, so the program subtracts one from the contour trackbar value every time.

Back to the parameters.

With max_level = 0, only the first contour found on the top level of the hierarchy is drawn. This combination is atypical and of little use; it is equivalent to index = 0, max_level = 0.

With max_level = 1, all contours on the top level of the hierarchy are drawn, which is already useful: we can see every donut in the frame.

Finally, with max_level = 2, the top-level contours and all contours on the next level, that is the holes, are drawn.

Now the other way round: fix max_level = 0 and vary index from 0 to 3.

Here we again see the confusing first combination, index = -1, max_level = 0; ignore it. After that everything is logical. As expected, we simply step through the contours on all levels, inner and outer.

To experiment with the parameters yourself, you can write a program that adds two trackbars for them to the window. We did something similar in the lesson on color filters.

    import numpy as np
    import cv2 as cv

    hsv_min = np.array((2, 28, 65), np.uint8)
    hsv_max = np.array((26, 238, 255), np.uint8)

    if __name__ == '__main__':
        fn = 'donuts.jpg'
        img = cv.imread(fn)

        hsv = cv.cvtColor(img, cv.COLOR_BGR2HSV)
        thresh = cv.inRange(hsv, hsv_min, hsv_max)
        # keep the last two return values so the code works on OpenCV 3.x and 4.x
        contours0, hierarchy = cv.findContours(thresh.copy(), cv.RETR_TREE, cv.CHAIN_APPROX_SIMPLE)[-2:]

        index = 0
        layer = 0

        def update():
            vis = img.copy()
            cv.drawContours(vis, contours0, index, (255, 0, 0), 2, cv.LINE_AA, hierarchy, layer)
            cv.imshow('contours', vis)

        def update_index(v):
            global index
            index = v - 1  # trackbar value 0 corresponds to index = -1 (all contours)
            update()

        def update_layer(v):
            global layer
            layer = v
            update()

        update_index(0)
        update_layer(0)
        # limit the contour trackbar so that index never exceeds the last contour
        cv.createTrackbar("contour", "contours", 0, len(contours0), update_index)
        cv.createTrackbar("layers", "contours", 0, 7, update_layer)

        cv.waitKey()
        cv.destroyAllWindows()

Food for thought

We can now find contours and draw them directly on the image. With contours in hand, we can move on to further analysis: finding geometric shapes, computing their coordinates and orientation, and detecting faces and gestures.

In the next lesson we start simple: we will look for rectangles in the frame and compute their tilt angle.


In the previous lesson we looked at several ways of finding regions of interest in an image. As a reminder, we:

- tried to find objects by color (most of the time you should not do this);

- tried to find a round sign with the HoughCircles function (it works sometimes);

- and studied the morphological operations (opening and closing).

Today's lesson goes deeper into working with contours, because a contour often helps to extract features from an image as well as regions of interest (a contour lets us capture the shape of an object).

First, let's recall how to find contours:

    import cv2
    import numpy as np

    my_photo = cv2.imread('DSCN1311.JPG')
    filterd_image = cv2.medianBlur(my_photo, 7)
    img_grey = cv2.cvtColor(filterd_image, cv2.COLOR_BGR2GRAY)

    # set a threshold
    thresh = 100

    # get the thresholded image
    ret, thresh_img = cv2.threshold(img_grey, thresh, 255, cv2.THRESH_BINARY)

    # find contours
    contours, hierarchy = cv2.findContours(thresh_img, cv2.RETR_TREE, cv2.CHAIN_APPROX_SIMPLE)

    # create an empty image for contours
    img_contours = np.uint8(np.zeros((my_photo.shape[0], my_photo.shape[1])))

    cv2.drawContours(img_contours, contours, -1, (255, 255, 255), 1)

    cv2.imshow('origin', my_photo)  # show the original image
    cv2.imshow('res', img_contours)  # show the contours
    cv2.waitKey()
    cv2.destroyAllWindows()

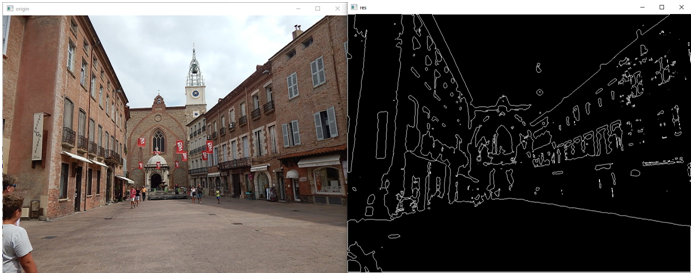

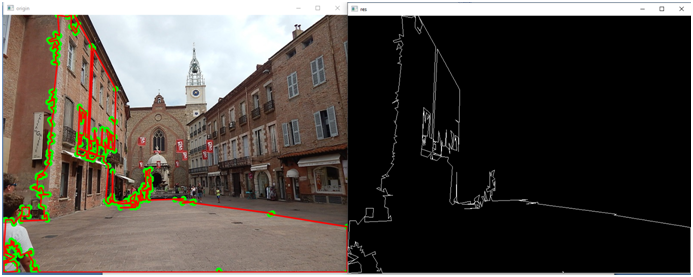

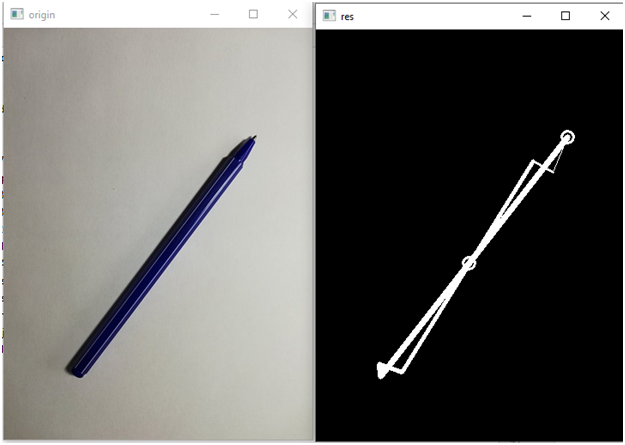

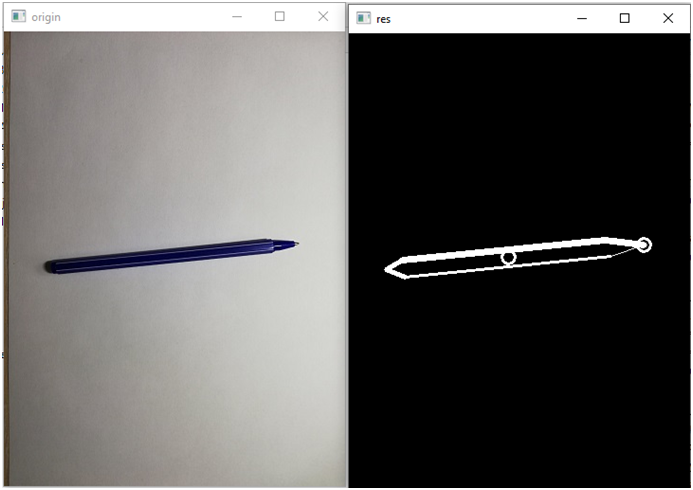

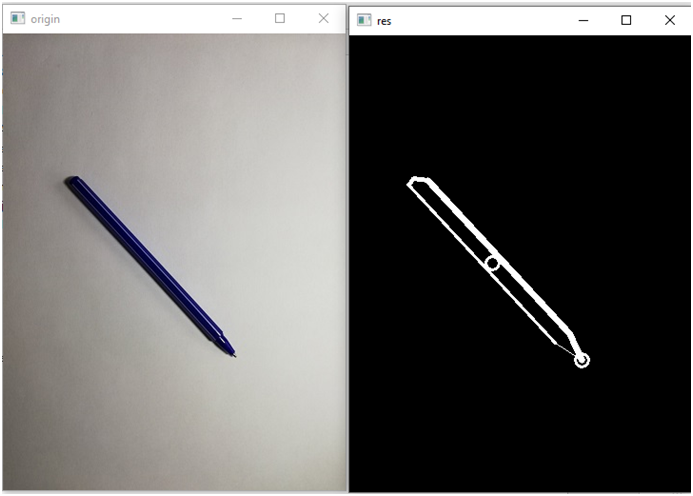

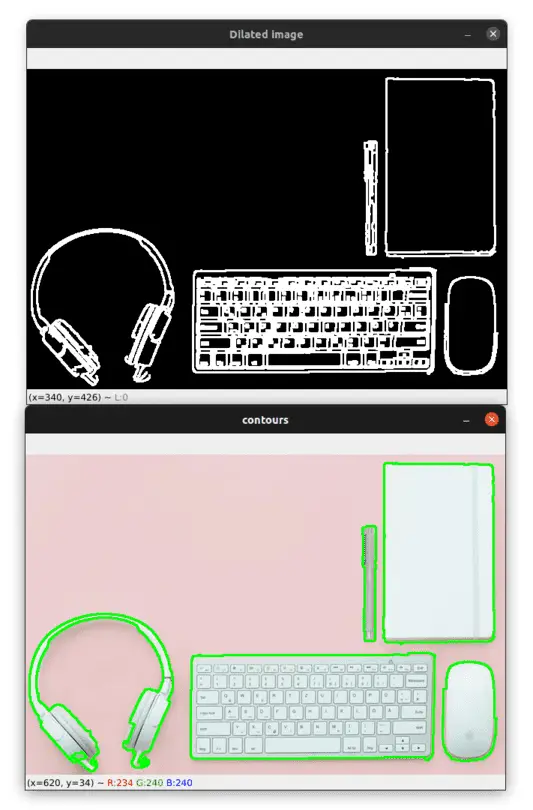

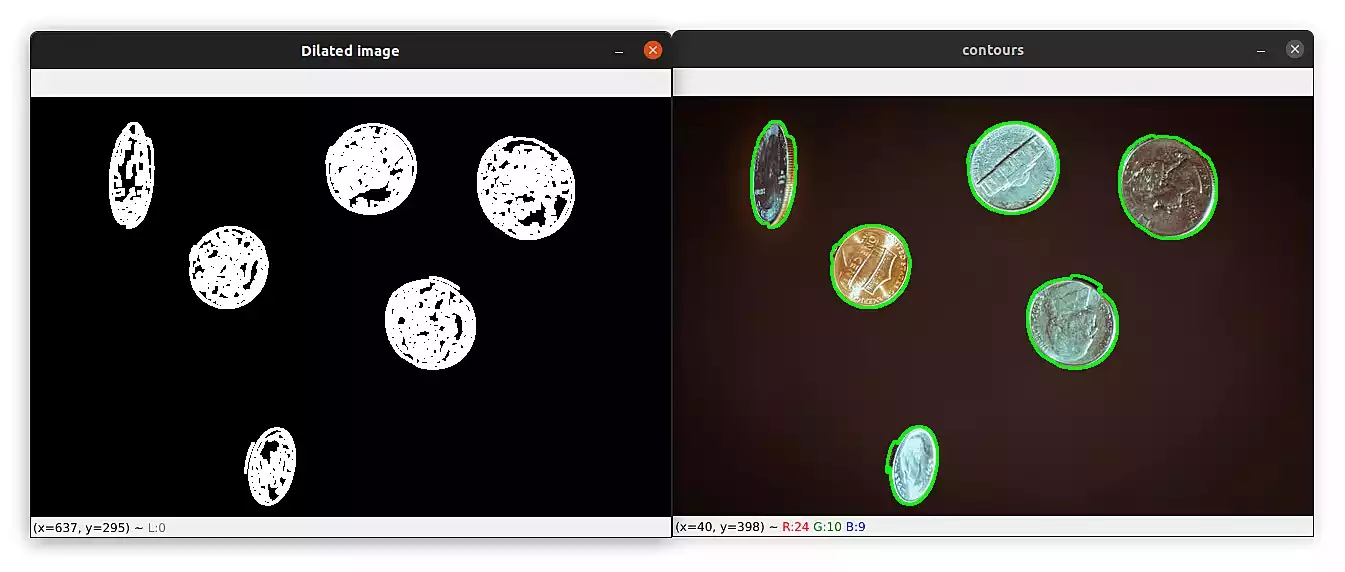

Note that we filter the image before extracting contours. Here is what we got:

Without filtering we would have got this (for comparison: without the filter on the right, with the filter on the left):

Now let's see what exactly findContours returns and how to work with it:

    contours, hierarchy = cv2.findContours(thresh_img, cv2.RETR_TREE, cv2.CHAIN_APPROX_SIMPLE)
    print(type(contours), type(hierarchy))

The output is:

<class 'tuple'> <class 'numpy.ndarray'>

So contours itself is an ordinary tuple, and the second returned value is a numpy array. If we inspect the tuple in a debugger, we see that its elements are numpy arrays:
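For readers without a debugger at hand, the layout of one such array can be sketched as follows. The coordinates are made up for illustration; what matters is the shape (N, 1, 2), where every entry is a single [[x, y]] pair, which is why the code in this lesson reads coordinates as point[0][0] and point[0][1].

```python
import numpy as np

# Hypothetical contour with the same layout findContours uses: shape (N, 1, 2).
contour = np.array([[[10, 20]], [[30, 20]], [[30, 40]], [[10, 40]]], dtype=np.int32)

# Dropping the middle axis gives a plain (N, 2) table of x, y pairs.
points = contour.reshape(-1, 2)
xs, ys = points[:, 0], points[:, 1]

print(contour.shape, points.shape)
print(xs.min(), xs.max(), ys.min(), ys.max())
```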

In other words, the function returns a whole set of contours, and we can work with each of them separately. For example, let's draw the fourth contour (its index is 3, since we count from zero):

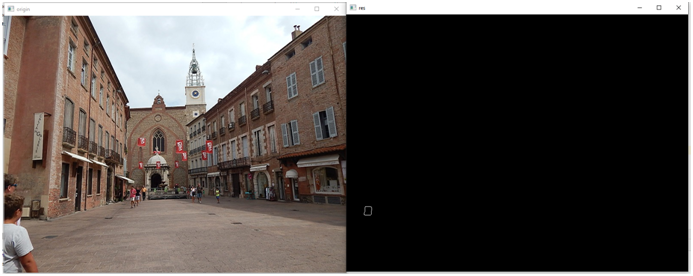

    img_contours = np.uint8(np.zeros((my_photo.shape[0], my_photo.shape[1])))
    cv2.drawContours(img_contours, contours, 3, (255, 255, 255), 1)

Here is what we see in the picture:

Можно вывести сразу несколько контуров:

sel_countours=[]

sel_countours.append(contours[3])

sel_countours.append(contours[7])

sel_countours.append(contours[8])

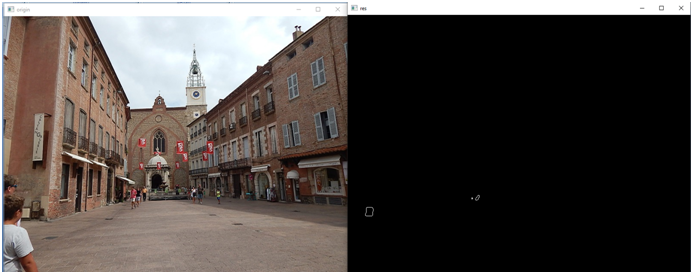

cv2.drawContours(img_contours, sel_countours, -1, (255,255,255), 1)Вот что мы увидим:

Найдем самый большой контур:

max=0

sel_countour=None

for countour in contours:

if countour.shape[0]>max:

sel_countour=countour

max=countour.shape[0]

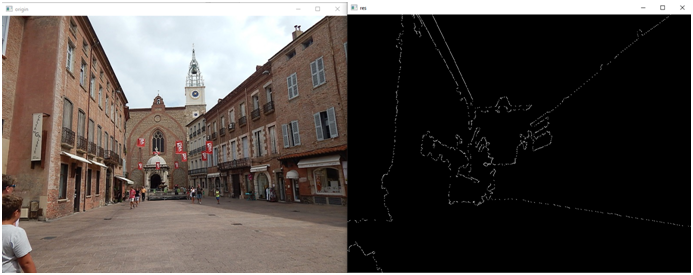

cv2.drawContours(img_contours, [sel_countour], -1, (255,255,255), 1)Смотрим:

Note that a contour can be stored either as individual points or as line segments, depending on the approximation parameter:

    contours, hierarchy = cv2.findContours(thresh_img, cv2.RETR_TREE, cv2.CHAIN_APPROX_SIMPLE)

In our case CHAIN_APPROX_SIMPLE is set, so the contour is stored as segment end points. If we draw only the stored points, we do not get a continuous contour:

    for point in sel_countour:
        y = int(point[0][1])
        x = int(point[0][0])
        img_contours[y, x] = 255

Let's look:

But if you tell findContours to search for contours without approximation:

    contours, hierarchy = cv2.findContours(thresh_img, cv2.RETR_TREE, cv2.CHAIN_APPROX_NONE)

then the contour will look like the one in the previous picture.

On the other hand, if approximation is enabled, you can draw the contour by connecting the points with lines:

    last_point = None
    for point in sel_countour:
        curr_point = point[0]
        if last_point is not None:
            x1 = int(last_point[0])
            y1 = int(last_point[1])
            x2 = int(curr_point[0])
            y2 = int(curr_point[1])
            cv2.line(img_contours, (x1, y1), (x2, y2), 255, thickness=1)
        last_point = curr_point

The result is the same as in the first picture.

So, findContours returns grouped sets of points that are either the points of a contour or the end points of its segments, depending on the approximation type.

Полученный контур мы можем и далее аппроксимировать:

import cv2

import numpy as np

import os

img = cv2.imread("DSCN1311.JPG")

gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)

thresh = 100

#get threshold image

ret,thresh_img = cv2.threshold(gray, thresh, 255, cv2.THRESH_BINARY)

# find contours without approx

contours,_ = cv2.findContours(thresh_img,cv2.RETR_TREE,cv2.CHAIN_APPROX_NONE)

max=0

sel_countour=None

for countour in contours:

if countour.shape[0]>max:

sel_countour=countour

max=countour.shape[0]

# calc arclentgh

arclen = cv2.arcLength(sel_countour, True)

# do approx

eps = 0.0005

epsilon = arclen * eps

approx = cv2.approxPolyDP(sel_countour, epsilon, True)

# draw the result

canvas = img.copy()

for pt in approx:

cv2.circle(canvas, (pt[0][0], pt[0][1]), 7, (0,255,0), -1)

cv2.drawContours(canvas, [approx], -1, (0,0,255), 2, cv2.LINE_AA)

img_contours = np.uint8(np.zeros((img.shape[0],img.shape[1])))

cv2.drawContours(img_contours, [approx], -1, (255,255,255), 1)

cv2.imshow('origin', canvas) # выводим итоговое изображение в окно

cv2.imshow('res', img_contours) # выводим итоговое изображение в окно

cv2.waitKey()

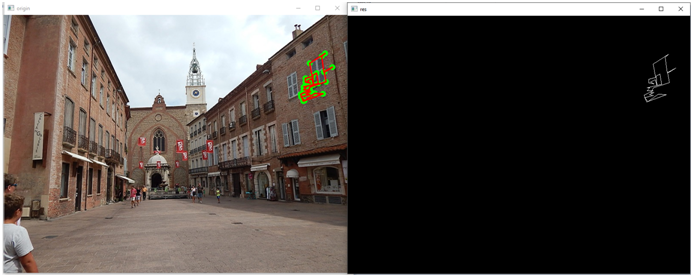

cv2.destroyAllWindows()Давайте посмотрим, что получиться:

We can control the accuracy of the approximation by changing eps. For example, replace 0.0005 with 0.005 and the picture is already quite different:

Now let's look more closely at the piece of code responsible for the approximation:

    # compute the arc length
    arclen = cv2.arcLength(sel_countour, True)
    # approximate
    eps = 0.0005
    epsilon = arclen * eps
    approx = cv2.approxPolyDP(sel_countour, epsilon, True)

The arcLength function returns the length of the contour (its perimeter when the second argument marks the contour as closed). Let's look at the lengths of different contours. First we sort the contours in decreasing order of size, using the number of points as a proxy for length. To do that, we define a custom sort key function:

    def custom_sort(countour):
        return -countour.shape[0]

Now we can sort the contours:

    contours = list(contours)
    contours.sort(key=custom_sort)

The longest contour comes first:

    sel_countour = contours[0]
    # compute the arc length
    arclen = cv2.arcLength(sel_countour, True)
    print(arclen)

The other contours are shorter; here, for example, is the contour at index 5:

Moving on. Having obtained the contour length, we compute the so-called epsilon, the parameter that sets the accuracy of the approximation: it is the maximum allowed distance between the original curve and its approximation.
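To see what this distance criterion means in practice, here is a small pure-Python sketch of the Douglas-Peucker algorithm, which the OpenCV documentation names as the method behind approxPolyDP. It is a simplified illustration for an open polyline, not OpenCV's implementation; the function names and the sample points are hypothetical.

```python
import math


def point_segment_distance(p, a, b):
    """Distance from point p to the segment a-b."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    if dx == 0 and dy == 0:
        return math.hypot(px - ax, py - ay)
    # projection of p onto the segment, clamped to its end points
    t = ((px - ax) * dx + (py - ay) * dy) / (dx * dx + dy * dy)
    t = max(0.0, min(1.0, t))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))


def simplify(points, epsilon):
    """Drop points that lie closer than epsilon to the simplified line."""
    if len(points) < 3:
        return list(points)
    first, last = points[0], points[-1]
    index, max_dist = 0, 0.0
    for i in range(1, len(points) - 1):
        d = point_segment_distance(points[i], first, last)
        if d > max_dist:
            index, max_dist = i, d
    if max_dist <= epsilon:
        return [first, last]          # everything in between is close enough
    left = simplify(points[:index + 1], epsilon)
    right = simplify(points[index:], epsilon)
    return left[:-1] + right          # the split point appears only once


# A slightly noisy L-shaped polyline (made-up data).
polyline = [(0, 0), (1, 0.05), (2, 0), (3, 0.05), (4, 0), (4, 1), (4.05, 2), (4, 3)]
print(simplify(polyline, 0.5))
```

A larger epsilon removes more points, which matches what happens to the contour when eps goes from 0.0005 to 0.005.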

The approximated contour is essentially the same kind of thing, points connected by segments, so it can also be drawn like this:

    last_point = None
    for point in approx:
        curr_point = point[0]
        if last_point is not None:
            x1 = int(last_point[0])
            y1 = int(last_point[1])
            x2 = int(curr_point[0])
            y2 = int(curr_point[1])
            cv2.line(img_contours, (x1, y1), (x2, y2), 255, thickness=1)
        last_point = curr_point

So now we know what the resulting contour is: a set of segments. We can even approximate these segments to get a coarser contour and thus get rid of small details. But what next? As I wrote in part 4, a contour can be turned into a graph or into geometric primitives, which describes it in a way that is invariant to translation, rotation, and even scaling.

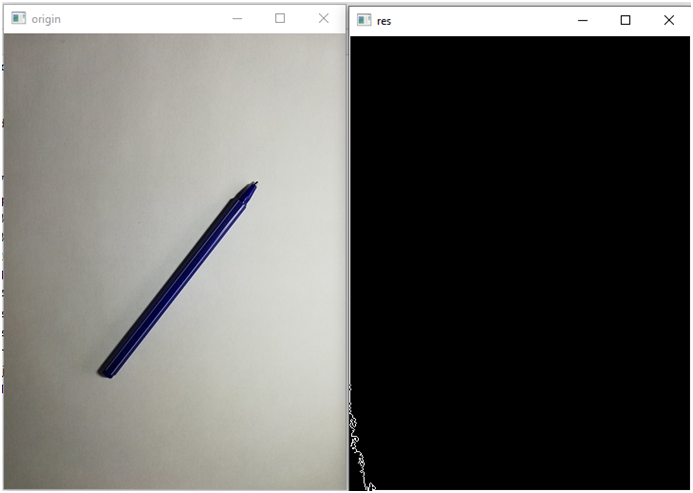

Сейчас мы попробуем создать такое инвариантное описание объекта. Пусть это будет обыкновенная шариковая ручка:

Логично предположить, что надо работать с самым длинным контуром. Найдем, его, это мы уже умеем:

Нет, не угадали, придется перебирать. К счастью, контур оказался второй по длине:

contours,_ = cv2.findContours(thresh_img,cv2.RETR_TREE,cv2.CHAIN_APPROX_NONE)

contours=list(contours)

contours.sort(key=custom_sort)

sel_countour=contours[1]Аппроксимируем его:

Как оказалось, при значении eps=0.005 контур имеет всего 7 элементов:

eps = 0.005

epsilon = arclen * eps

approx = cv2.approxPolyDP(sel_countour, epsilon, True)

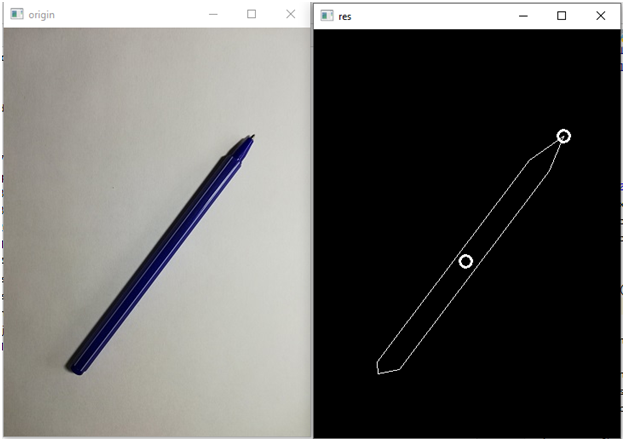

print(len(approx))Посмотрим, как будет выделен контур в других положениях:

In the last case, by the way, we got 9 elements instead of 7. In short, the shadow is the problem. We need to get rid of the small details somehow. But how? Raise the approximation threshold? Let's set it to 0.01:

The number of elements became 6. On the other photos it is also 6. Here is our hexagon:

Now let's try to describe this contour invariantly. There are two ways to do it:

- the angles between the contour's sides;

- the ratios of the side lengths.

Both are invariant to translation, rotation, and scaling. But the question is: where do we start counting? One option is to find the center of the contour and start from the point farthest from it. How do we find the center? As the mean coordinate of all contour points.

    sum_x = 0.0
    sum_y = 0.0
    for point in approx:
        x = float(point[0][0])
        y = float(point[0][1])
        sum_x += x
        sum_y += y
    xc = sum_x / float(len(approx))
    yc = sum_y / float(len(approx))

Let's mark the center after drawing the contour:

    cv2.circle(img_contours, (int(xc), int(yc)), 7, (255, 255, 255), 2)

Let's find the point farthest from the center (this requires import math):

    max = 0
    beg_point = -1
    for i in range(0, len(approx)):
        point = approx[i]
        x = float(point[0][0])
        y = float(point[0][1])
        dx = x - xc
        dy = y - yc
        r = math.sqrt(dx * dx + dy * dy)
        if r > max:
            max = r
            beg_point = i

Let's draw it:

    point = approx[beg_point]
    x = float(point[0][0])
    y = float(point[0][1])
    cv2.circle(img_contours, (int(x), int(y)), 7, (255, 255, 255), 2)

Now we simply walk around the contour clockwise, starting from the point we found. To do this, we convert the point coordinates to polar form and sort them by angle.

We compute the polar coordinates with this function:

    def get_polar_coordinates(x0, y0, x, y, xc, yc):
        # first polar coordinate: the radius
        dx = xc - x
        dy = yc - y
        r = math.sqrt(dx * dx + dy * dy)

        # second polar coordinate: the angle, measured relative to the starting point
        dx0 = xc - x0
        dy0 = yc - y0
        r0 = math.sqrt(dx0 * dx0 + dy0 * dy0)
        scal_mul = dx0 * dx + dy0 * dy
        cos_angle = scal_mul / r / r0
        sgn = dx0 * dy - dx * dy0  # determine which way the vector is rotated
        angle = math.acos(cos_angle)
        if sgn < 0:
            angle = 2 * math.pi - angle
        return angle, r

Here we pass in the reference (starting) point, the point of interest, and our center. The first coordinate is the radius, computed with the Pythagorean theorem. The angle comes from the dot product. There is a catch, though: the dot product gives the angle between the vectors but not its direction. To get the direction, we compute the determinant of the matrix formed by the two vectors; its sign is the direction of rotation. But a plain negative angle is not what we want, because after sorting the first point would then be the one with the most negative angle rather than the reference point. So if the rotation goes the other way, we subtract the angle from 2 pi radians (360 degrees).
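The same idea, a dot product for the size of the angle and a determinant for its direction, can be written more compactly with math.atan2. This is an alternative sketch, not the function used in this lesson; the name `signed_angle` is made up for illustration.

```python
import math


def signed_angle(ref, vec):
    """Angle from ref to vec, mapped to the range 0 .. 2*pi.

    dot   = |ref| * |vec| * cos(angle)
    cross = |ref| * |vec| * sin(angle)  (the 2x2 determinant)
    atan2 combines both, so no separate sign check or acos clamping is needed.
    """
    dot = ref[0] * vec[0] + ref[1] * vec[1]
    cross = ref[0] * vec[1] - ref[1] * vec[0]
    return math.atan2(cross, dot) % (2 * math.pi)


print(signed_angle((1, 0), (0, 1)))   # a quarter turn
print(signed_angle((1, 0), (0, -1)))  # three quarters of a turn, not a negative angle
```

Keep in mind that in image coordinates the y axis points down, so an angle that grows counter-clockwise on paper grows clockwise on the screen.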

If that is unclear, I will demonstrate the problem in a moment. But first, let's sort:

    def polar_sort(item):
        return item[0][0]  # sort key: the polar angle

    polar_coordinates = []
    x0 = approx[beg_point][0][0]
    y0 = approx[beg_point][0][1]
    print(x0, y0)
    for point in approx:
        x = int(point[0][0])
        y = int(point[0][1])
        angle, r = get_polar_coordinates(x0, y0, x, y, xc, yc)
        polar_coordinates.append(((angle, r), (x, y)))
    print(polar_coordinates)
    polar_coordinates.sort(key=polar_sort)

And then draw:

    img_contours = np.uint8(np.zeros((img.shape[0], img.shape[1])))
    size = len(polar_coordinates)
    for i in range(1, size):
        _, point1 = polar_coordinates[i - 1]
        _, point2 = polar_coordinates[i]
        x1, y1 = point1
        x2, y2 = point2
        cv2.line(img_contours, (x1, y1), (x2, y2), 255, thickness=i)
    # close the contour: connect the last point to the first
    _, point1 = polar_coordinates[size - 1]
    _, point2 = polar_coordinates[0]
    x1, y1 = point1
    x2, y2 = point2
    cv2.line(img_contours, (x1, y1), (x2, y2), 255, thickness=size)

Let's see what we got:

To make the traversal order visible, I drew the first lines thin, and they get thicker as the traversal goes on.

Now let's remove the direction-of-rotation logic from the polar conversion function:

    def get_polar_coordinates(x0, y0, x, y, xc, yc):
        # first polar coordinate: the radius
        dx = xc - x
        dy = yc - y
        r = math.sqrt(dx * dx + dy * dy)

        # second polar coordinate: the angle, measured relative to the starting point
        dx0 = xc - x0
        dy0 = yc - y0
        r0 = math.sqrt(dx0 * dx0 + dy0 * dy0)
        scal_mul = dx0 * dx + dy0 * dy
        cos_angle = scal_mul / r / r0
        # sgn = dx0 * dy - dx * dy0  # determine which way the vector is rotated
        angle = math.acos(cos_angle)
        # if sgn < 0:
        #     angle = 2 * math.pi - angle
        return angle, r

And this is the nonsense we get:

So let's put the commented-out lines back and continue.

Let's move on to the invariant description: the angles between the contour's sides. Here we assume that the angles are positive and less than 180 degrees, so we skip the direction logic. Actually, it is even better to use the cosines of the angles instead of the angles themselves; they take values from -1 to 1. In essence this is already an ordinary vector that we can feed into a classification algorithm, for example a neural network.

So, the function that computes the cosine of the angle between two sides:

    def get_cos_edges(edges):
        dx1, dy1, dx2, dy2 = edges
        r1 = math.sqrt(dx1 * dx1 + dy1 * dy1)
        r2 = math.sqrt(dx2 * dx2 + dy2 * dy2)
        return (dx1 * dx2 + dy1 * dy2) / r1 / r2

Note that the function takes relative coordinates, not absolute ones. We need to compute them, so let's write one more function:

    def get_coords(item1, item2, item3):
        _, point1 = item1
        _, point2 = item2
        _, point3 = item3
        x1, y1 = point1
        x2, y2 = point2
        x3, y3 = point3
        # both side vectors start at the middle point
        dx1 = x1 - x2
        dy1 = y1 - y2
        dx2 = x3 - x2
        dy2 = y3 - y2
        return dx1, dy1, dx2, dy2

And finally, the code that builds the invariant description:

    coses = []
    coses.append(get_cos_edges(get_coords(polar_coordinates[size - 1], polar_coordinates[0], polar_coordinates[1])))
    for i in range(1, size - 1):
        coses.append(get_cos_edges(get_coords(polar_coordinates[i - 1], polar_coordinates[i], polar_coordinates[i + 1])))
    coses.append(get_cos_edges(get_coords(polar_coordinates[size - 2], polar_coordinates[size - 1], polar_coordinates[0])))
    print(coses)

Let's run the program and look at these vectors for different positions of the pen:

The resulting vector:

[0.8435094506704439, -0.9679482843035412, -0.7475204740128089, 0.12575426475263257, -0.7530074822433576, -0.9513518107379842]

Let's look at another position:

The resulting vector:

[0.8997284651496198, -0.9738348113021638, -0.886281044605172, 0.6119832801209469, -0.9073303511520623, -0.9760783176138438]

As we can see, the first two numbers are close, the third is a little further off, the fourth changed a lot, the second to last differs as well, and the last one almost matches again.

For a cleaner experiment, one more position:

Vector:

[0.8447017514267182, -0.968529494204698, -0.20124730714807806, -0.4685934718394871, -0.7702667523702886, -0.9517100095171195]

We see a similar situation.
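One simple way to put numbers on this drift is to compare the vectors component by component. The sketch below uses the three vectors printed above; the helper names and the choice of absolute difference and Euclidean distance as measures are assumptions made for illustration, not part of the original example.

```python
import math

# The three description vectors printed above.
v1 = [0.8435094506704439, -0.9679482843035412, -0.7475204740128089,
      0.12575426475263257, -0.7530074822433576, -0.9513518107379842]
v2 = [0.8997284651496198, -0.9738348113021638, -0.886281044605172,
      0.6119832801209469, -0.9073303511520623, -0.9760783176138438]
v3 = [0.8447017514267182, -0.968529494204698, -0.20124730714807806,
      -0.4685934718394871, -0.7702667523702886, -0.9517100095171195]


def component_diffs(a, b):
    """Absolute difference of each pair of components."""
    return [abs(x - y) for x, y in zip(a, b)]


def euclidean(a, b):
    """Overall distance between two description vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def most_unstable(a, b):
    """Index of the component that differs the most."""
    diffs = component_diffs(a, b)
    return max(range(len(diffs)), key=diffs.__getitem__)


for other in (v2, v3):
    print(most_unstable(v1, other), round(euclidean(v1, other), 3))
```

In both comparisons the largest difference falls on the fourth component (index 3), which agrees with the observation above.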

Of course, it is not good that some components of the vector drift so much (the shadow gets in the way again). This will complicate identification. But we have another option, which we will look at in the next lesson. For now, to conclude the lesson, here is the complete code of the example:

    import cv2
    import numpy as np
    import math

    img = cv2.imread("Samples/1.jpg")
    gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
    thresh = 100

    def custom_sort(countour):
        return -countour.shape[0]

    def polar_sort(item):
        return item[0][0]

    def get_cos_edges(edges):
        dx1, dy1, dx2, dy2 = edges
        r1 = math.sqrt(dx1 * dx1 + dy1 * dy1)
        r2 = math.sqrt(dx2 * dx2 + dy2 * dy2)
        return (dx1 * dx2 + dy1 * dy2) / r1 / r2

    def get_polar_coordinates(x0, y0, x, y, xc, yc):
        # first polar coordinate: the radius
        dx = xc - x
        dy = yc - y
        r = math.sqrt(dx * dx + dy * dy)

        # second polar coordinate: the angle, measured relative to the starting point
        dx0 = xc - x0
        dy0 = yc - y0
        r0 = math.sqrt(dx0 * dx0 + dy0 * dy0)
        scal_mul = dx0 * dx + dy0 * dy
        cos_angle = scal_mul / r / r0
        sgn = dx0 * dy - dx * dy0  # determine which way the vector is rotated
        # rounding can push the cosine slightly outside [-1, 1]; clamp it for acos
        if abs(cos_angle) > 1:
            if abs(cos_angle) > 1.0001:
                raise Exception("Something went wrong")
            cos_angle = math.copysign(1.0, cos_angle)
        angle = math.acos(cos_angle)
        if sgn < 0:
            angle = 2 * math.pi - angle
        return angle, r

    def get_coords(item1, item2, item3):
        _, point1 = item1
        _, point2 = item2
        _, point3 = item3
        x1, y1 = point1
        x2, y2 = point2
        x3, y3 = point3
        dx1 = x1 - x2
        dy1 = y1 - y2
        dx2 = x3 - x2
        dy2 = y3 - y2
        return dx1, dy1, dx2, dy2

    # get the thresholded image
    ret, thresh_img = cv2.threshold(gray, thresh, 255, cv2.THRESH_BINARY)

    # find contours without approximation
    contours, _ = cv2.findContours(thresh_img, cv2.RETR_TREE, cv2.CHAIN_APPROX_NONE)
    contours = list(contours)
    contours.sort(key=custom_sort)
    sel_countour = contours[1]

    # compute the arc length
    arclen = cv2.arcLength(sel_countour, True)

    # approximate
    eps = 0.01
    epsilon = arclen * eps
    approx = cv2.approxPolyDP(sel_countour, epsilon, True)

    # center of the contour: the mean of its points
    sum_x = 0.0
    sum_y = 0.0
    for point in approx:
        x = float(point[0][0])
        y = float(point[0][1])
        sum_x += x
        sum_y += y
    xc = sum_x / float(len(approx))
    yc = sum_y / float(len(approx))

    # starting point: the point farthest from the center
    max = 0
    beg_point = -1
    for i in range(0, len(approx)):
        point = approx[i]
        x = float(point[0][0])
        y = float(point[0][1])
        dx = x - xc
        dy = y - yc
        r = math.sqrt(dx * dx + dy * dy)
        if r > max:
            max = r
            beg_point = i

    # polar coordinates of every point, sorted by angle
    polar_coordinates = []
    x0 = approx[beg_point][0][0]
    y0 = approx[beg_point][0][1]
    for point in approx:
        x = int(point[0][0])
        y = int(point[0][1])
        angle, r = get_polar_coordinates(x0, y0, x, y, xc, yc)
        polar_coordinates.append(((angle, r), (x, y)))
    polar_coordinates.sort(key=polar_sort)

    # draw the contour in traversal order, with growing line thickness
    img_contours = np.uint8(np.zeros((img.shape[0], img.shape[1])))
    size = len(polar_coordinates)
    for i in range(1, size):
        _, point1 = polar_coordinates[i - 1]
        _, point2 = polar_coordinates[i]
        x1, y1 = point1
        x2, y2 = point2
        cv2.line(img_contours, (x1, y1), (x2, y2), 255, thickness=i)
    _, point1 = polar_coordinates[size - 1]
    _, point2 = polar_coordinates[0]
    x1, y1 = point1
    x2, y2 = point2
    cv2.line(img_contours, (x1, y1), (x2, y2), 255, thickness=size)

    cv2.circle(img_contours, (int(xc), int(yc)), 7, (255, 255, 255), 2)

    # invariant description: cosines of the angles between adjacent sides
    coses = []
    coses.append(get_cos_edges(get_coords(polar_coordinates[size - 1], polar_coordinates[0], polar_coordinates[1])))
    for i in range(1, size - 1):
        coses.append(get_cos_edges(get_coords(polar_coordinates[i - 1], polar_coordinates[i], polar_coordinates[i + 1])))
    coses.append(get_cos_edges(get_coords(polar_coordinates[size - 2], polar_coordinates[size - 1], polar_coordinates[0])))
    print(coses)

    point = approx[beg_point]
    x = float(point[0][0])
    y = float(point[0][1])
    cv2.circle(img_contours, (int(x), int(y)), 7, (255, 255, 255), 2)

    cv2.imshow('origin', img)  # show the original image
    cv2.imshow('res', img_contours)  # show the result
    cv2.waitKey()
    cv2.destroyAllWindows()

Using contour detection, we can detect the borders of objects and localize them easily in an image. It is often the first step for many interesting applications, such as image-foreground extraction, simple image segmentation, detection, and recognition.

So let’s learn about contours and contour detection, using OpenCV, and see for ourselves how they can be used to build various applications.

Contents

- Application of Contours in Computer Vision

- What are contours?

- Steps for finding and drawing contours using OpenCV.

- Finding and drawing contours using OpenCV

- Drawing contours using CHAIN_APPROX_NONE

- Drawing contours using CHAIN_APPROX_SIMPLE.

- Contour hierarchies

- Parent-child relationship.

- Contour Relationship Representation

- Different contour retrieval techniques

- Summary

Application of Contours in Computer Vision

Some really cool applications have been built, using contours for motion detection or segmentation. Here are some examples:

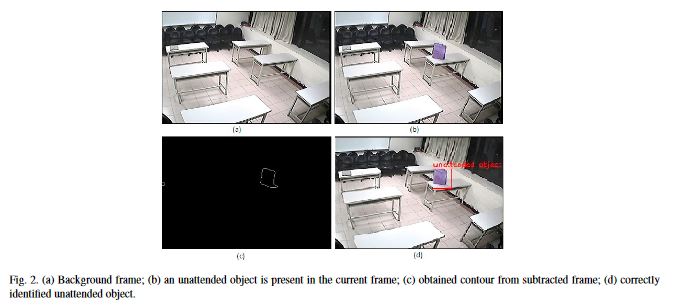

- Motion Detection: In surveillance video, motion detection technology has numerous applications, ranging from indoor and outdoor security environments, traffic control, behaviour detection during sports activities, detection of unattended objects, and even compression of video. In the figure below, see how detecting the movement of people in a video stream could be useful in a surveillance application. Notice how the group of people standing still in the left side of the image are not detected. Only those in motion are captured. Do refer to this paper to study this approach in detail.

- Unattended object detection: Any unattended object in public places is generally considered as a suspicious object. An effective and safe solution could be: (Unattended Object Detection through Contour Formation using Background Subtraction).

- Background / Foreground Segmentation: To replace the background of an image with another, you need to perform image-foreground extraction (similar to image segmentation). Using contours is one approach that can be used to perform segmentation. Refer to this post for more details. The following images show simple examples of such an application:


What are Contours

When we join all the points on the boundary of an object, we get a contour. Typically, a specific contour refers to boundary pixels that have the same color and intensity. OpenCV makes it really easy to find and draw contours in images. It provides two simple functions:

- findContours()

- drawContours()

Also, it offers two commonly used contour-approximation methods, which control how the points of a detected contour are stored:

- CHAIN_APPROX_SIMPLE

- CHAIN_APPROX_NONE

We will cover these in detail in the examples below. The following figure shows the contours detected for simple objects.

Now that you have been introduced to contours, let’s discuss the steps involved in their detection.

Steps for Detecting and Drawing Contours in OpenCV

OpenCV makes this a fairly simple task. Just follow these steps:

- Read the Image and convert it to Grayscale Format

Read the image and convert it to a single-channel grayscale image. This matters because thresholding works on a single channel, and thresholding in turn is necessary for the contour-detection algorithm to work properly.

- Apply Binary Thresholding

While finding contours, first always apply binary thresholding or Canny edge detection to the grayscale image. Here, we will apply binary thresholding.

This converts the image to black and white, highlighting the objects-of-interest to make things easy for the contour-detection algorithm. Thresholding turns the border of the object in the image completely white, with all pixels having the same intensity. The algorithm can now detect the borders of the objects from these white pixels.

Note: The black pixels, having value 0, are perceived as background pixels and ignored.

At this point, one question may arise. What if we use single channels like R (red), G (green), or B (blue) instead of grayscale (thresholded) images? In such a case, the contour detection algorithm will not work well. As we discussed previously, the algorithm looks for borders, and similar intensity pixels to detect the contours. A binary image provides this information much better than a single (RGB) color channel image. In a later portion of the blog, we have resultant images when using only a single R, G, or B channel instead of grayscale and thresholded images.

- Find the Contours

Use the findContours() function to detect the contours in the image.

- Draw Contours on the Original RGB Image.

Once contours have been identified, use the drawContours() function to overlay the contours on the original RGB image.

The above steps will make much more sense, and become even clearer, once we start to code.

Finding and Drawing Contours using OpenCV

Start by importing OpenCV, and reading the input image.

Python:

import cv2

# read the image

image = cv2.imread('input/image_1.jpg')


We assume that the image is inside the input folder of the current project directory. The next step is to convert the image into a grayscale image (single channel format).

Note: All the C++ code is contained within the main() function.

C++:

#include<opencv2/opencv.hpp>

#include <iostream>

using namespace std;

using namespace cv;

int main() {

// read the image

Mat image = imread("input/image_1.jpg");

Next, use the cvtColor() function to convert the original RGB image to a grayscale image.

Python:

# convert the image to grayscale format

img_gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)

C++:

// convert the image to grayscale format

Mat img_gray;

cvtColor(image, img_gray, COLOR_BGR2GRAY);

Now, use the threshold() function to apply a binary threshold to the image. Any pixel with a value greater than 150 will be set to a value of 255 (white). All remaining pixels in the resulting image will be set to 0 (black). The threshold value of 150 is a tunable parameter, so you can experiment with it.

After thresholding, visualize the binary image, using the imshow() function as shown below.

Python:

# apply binary thresholding

ret, thresh = cv2.threshold(img_gray, 150, 255, cv2.THRESH_BINARY)

# visualize the binary image

cv2.imshow('Binary image', thresh)

cv2.waitKey(0)

cv2.imwrite('image_thres1.jpg', thresh)

cv2.destroyAllWindows()

C++:

// apply binary thresholding

Mat thresh;

threshold(img_gray, thresh, 150, 255, THRESH_BINARY);

imshow("Binary image", thresh);

waitKey(0);

imwrite("image_thres1.jpg", thresh);

destroyAllWindows();

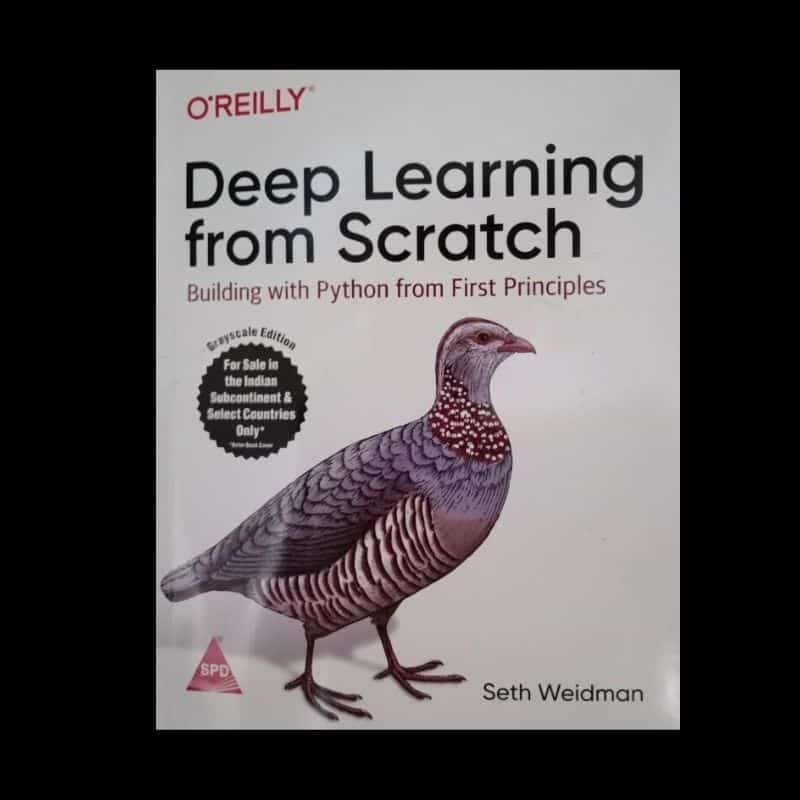

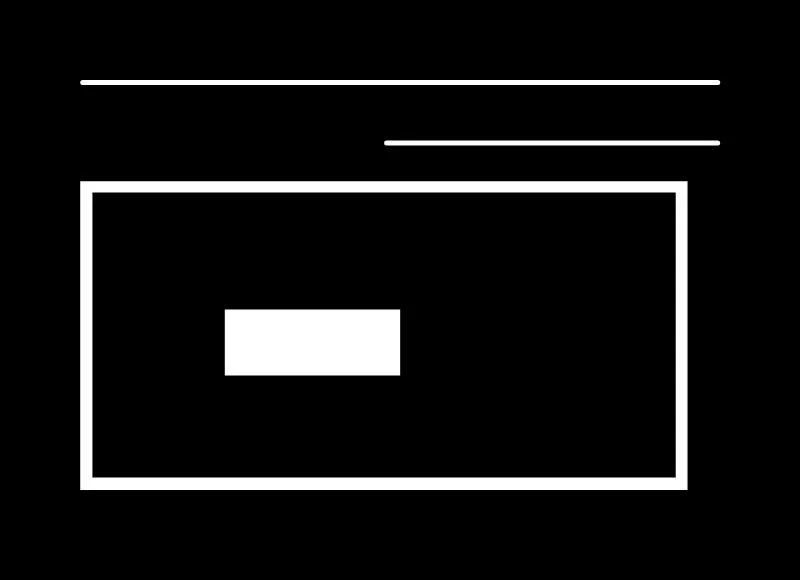

Check out the binary image produced by the code above! It is a binary representation of the original RGB image. You can clearly see how the pen, the borders of the tablet and the phone are all white. The contour algorithm will consider these as objects, and find the contour points around the borders of these white objects.

Note how the background is completely black, including the backside of the phone. Such regions will be ignored by the algorithm. Taking the white pixels around the perimeter of each object as similar-intensity pixels, the algorithm will join them to form a contour based on a similarity measure.

Drawing Contours using CHAIN_APPROX_NONE

Now, let’s find and draw the contours, using the CHAIN_APPROX_NONE method.

Start with the findContours() function. It has three required arguments, as shown below. For optional arguments, please refer to the documentation page here.

- image: The binary input image obtained in the previous step.

- mode: The contour-retrieval mode. We provide RETR_TREE here, which means the algorithm will retrieve all possible contours from the binary image. More contour-retrieval modes are available, and we will discuss them below.

- method: The contour-approximation method. In this example, we use CHAIN_APPROX_NONE. Though slightly slower than CHAIN_APPROX_SIMPLE, it stores ALL contour points.

It’s worth emphasizing here that mode refers to the type of contours that will be retrieved, while method refers to which points within a contour are stored. We will be discussing both in more detail below.

It is easy to visualize and understand results from different methods on the same image.

In the code samples below, we therefore make a copy of the original image and then demonstrate the methods (not wanting to edit the original).

Next, use the drawContours() function to overlay the contours on the RGB image. This function has four required and several optional arguments. The first four arguments below are required. For the optional arguments, please refer to the documentation page here.

- image: The input RGB image on which you want to draw the contours.

- contours: The contours obtained from the findContours() function.

- contourIdx: The index of the contour to draw from the contours list. Providing a negative value draws all the contours.

- color: The color of the contours you want to draw. We are drawing them in green.

- thickness: The thickness of the contour lines.

Python:

# detect the contours on the binary image using cv2.CHAIN_APPROX_NONE

contours, hierarchy = cv2.findContours(image=thresh, mode=cv2.RETR_TREE, method=cv2.CHAIN_APPROX_NONE)

# draw contours on the original image

image_copy = image.copy()

cv2.drawContours(image=image_copy, contours=contours, contourIdx=-1, color=(0, 255, 0), thickness=2, lineType=cv2.LINE_AA)

# see the results

cv2.imshow('None approximation', image_copy)

cv2.waitKey(0)

cv2.imwrite('contours_none_image1.jpg', image_copy)

cv2.destroyAllWindows()

C++:

// detect the contours on the binary image using cv2.CHAIN_APPROX_NONE

vector<vector<Point>> contours;

vector<Vec4i> hierarchy;

findContours(thresh, contours, hierarchy, RETR_TREE, CHAIN_APPROX_NONE);

// draw contours on the original image

Mat image_copy = image.clone();

drawContours(image_copy, contours, -1, Scalar(0, 255, 0), 2);

imshow("None approximation", image_copy);

waitKey(0);

imwrite("contours_none_image1.jpg", image_copy);

destroyAllWindows();

Executing the above code will produce and display the image shown below. We also save the image to disk.

The following figure shows the original image (on the left), as well as the original image with the contours overlaid (on the right).

As you can see in the above figure, the contours produced by the algorithm do a nice job of identifying the boundary of each object. However, if you look closely at the phone, you will find that it contains more than one contour. Separate contours have been identified for the circular areas associated with the camera lens and light. There are also ‘secondary’ contours, along portions of the edge of the phone.

Keep in mind that the accuracy and quality of the contour algorithm is heavily dependent on the quality of the binary image that is supplied (look at the binary image in the previous section again, you can see the lines associated with these secondary contours). Some applications require high quality contours. In such cases, experiment with different thresholds when creating the binary image, and see if that improves the resulting contours.

There are other approaches that can be used to eliminate unwanted contours from the binary maps prior to contour generation. You can also use more advanced features associated with the contour algorithm that we will be discussing here.

Using Single Channel: Red, Green, or Blue

Just to get an idea, the following are some results when using the red, green, and blue channels separately while detecting contours. We discussed this in the contour detection steps previously. The Python and C++ code below uses the same image as above.

Python:

import cv2

# read the image

image = cv2.imread('input/image_1.jpg')

# B, G, R channel splitting

blue, green, red = cv2.split(image)

# detect contours using blue channel and without thresholding

contours1, hierarchy1 = cv2.findContours(image=blue, mode=cv2.RETR_TREE, method=cv2.CHAIN_APPROX_NONE)

# draw contours on the original image

image_contour_blue = image.copy()

cv2.drawContours(image=image_contour_blue, contours=contours1, contourIdx=-1, color=(0, 255, 0), thickness=2, lineType=cv2.LINE_AA)

# see the results

cv2.imshow('Contour detection using blue channels only', image_contour_blue)

cv2.waitKey(0)

cv2.imwrite('blue_channel.jpg', image_contour_blue)

cv2.destroyAllWindows()

# detect contours using green channel and without thresholding

contours2, hierarchy2 = cv2.findContours(image=green, mode=cv2.RETR_TREE, method=cv2.CHAIN_APPROX_NONE)

# draw contours on the original image

image_contour_green = image.copy()

cv2.drawContours(image=image_contour_green, contours=contours2, contourIdx=-1, color=(0, 255, 0), thickness=2, lineType=cv2.LINE_AA)

# see the results

cv2.imshow('Contour detection using green channels only', image_contour_green)

cv2.waitKey(0)

cv2.imwrite('green_channel.jpg', image_contour_green)

cv2.destroyAllWindows()

# detect contours using red channel and without thresholding

contours3, hierarchy3 = cv2.findContours(image=red, mode=cv2.RETR_TREE, method=cv2.CHAIN_APPROX_NONE)

# draw contours on the original image

image_contour_red = image.copy()

cv2.drawContours(image=image_contour_red, contours=contours3, contourIdx=-1, color=(0, 255, 0), thickness=2, lineType=cv2.LINE_AA)

# see the results

cv2.imshow('Contour detection using red channels only', image_contour_red)

cv2.waitKey(0)

cv2.imwrite('red_channel.jpg', image_contour_red)

cv2.destroyAllWindows()

C++:

#include<opencv2/opencv.hpp>

#include <iostream>

using namespace std;

using namespace cv;

int main() {

// read the image

Mat image = imread("input/image_1.jpg");

// B, G, R channel splitting

Mat channels[3];

split(image, channels);

// detect contours using blue channel and without thresholding

vector<vector<Point>> contours1;

vector<Vec4i> hierarchy1;

findContours(channels[0], contours1, hierarchy1, RETR_TREE, CHAIN_APPROX_NONE);

// draw contours on the original image

Mat image_contour_blue = image.clone();

drawContours(image_contour_blue, contours1, -1, Scalar(0, 255, 0), 2);

imshow("Contour detection using blue channels only", image_contour_blue);

waitKey(0);

imwrite("blue_channel.jpg", image_contour_blue);

destroyAllWindows();

// detect contours using green channel and without thresholding

vector<vector<Point>> contours2;

vector<Vec4i> hierarchy2;

findContours(channels[1], contours2, hierarchy2, RETR_TREE, CHAIN_APPROX_NONE);

// draw contours on the original image

Mat image_contour_green = image.clone();

drawContours(image_contour_green, contours2, -1, Scalar(0, 255, 0), 2);

imshow("Contour detection using green channels only", image_contour_green);

waitKey(0);

imwrite("green_channel.jpg", image_contour_green);

destroyAllWindows();

// detect contours using red channel and without thresholding

vector<vector<Point>> contours3;

vector<Vec4i> hierarchy3;

findContours(channels[2], contours3, hierarchy3, RETR_TREE, CHAIN_APPROX_NONE);

// draw contours on the original image

Mat image_contour_red = image.clone();

drawContours(image_contour_red, contours3, -1, Scalar(0, 255, 0), 2);

imshow("Contour detection using red channels only", image_contour_red);

waitKey(0);

imwrite("red_channel.jpg", image_contour_red);

destroyAllWindows();

}

The following figure shows the contour detection results for all the three separate color channels.

In the above image we can see that the contour detection algorithm is not able to find the contours properly. This is because it is not able to detect the borders of the objects properly, and also the intensity difference between the pixels is not well defined. This is the reason we prefer to use a grayscale, and binary thresholded image for detecting contours.

Drawing Contours using CHAIN_APPROX_SIMPLE

Let’s find out now how the CHAIN_APPROX_SIMPLE algorithm works and what makes it different from the CHAIN_APPROX_NONE algorithm.

Here’s the code for it:

Python:

"""

Now let's try with `cv2.CHAIN_APPROX_SIMPLE`

"""

# detect the contours on the binary image using cv2.CHAIN_APPROX_SIMPLE

contours1, hierarchy1 = cv2.findContours(thresh, cv2.RETR_TREE, cv2.CHAIN_APPROX_SIMPLE)

# draw contours on the original image for `CHAIN_APPROX_SIMPLE`

image_copy1 = image.copy()

cv2.drawContours(image_copy1, contours1, -1, (0, 255, 0), 2, cv2.LINE_AA)

# see the results

cv2.imshow('Simple approximation', image_copy1)

cv2.waitKey(0)

cv2.imwrite('contours_simple_image1.jpg', image_copy1)

cv2.destroyAllWindows()

C++:

// Now let us try with CHAIN_APPROX_SIMPLE

// detect the contours on the binary image using CHAIN_APPROX_SIMPLE

vector<vector<Point>> contours1;

vector<Vec4i> hierarchy1;

findContours(thresh, contours1, hierarchy1, RETR_TREE, CHAIN_APPROX_SIMPLE);

// draw contours on the original image

Mat image_copy1 = image.clone();

drawContours(image_copy1, contours1, -1, Scalar(0, 255, 0), 2);

imshow("Simple approximation", image_copy1);

waitKey(0);

imwrite("contours_simple_image1.jpg", image_copy1);

destroyAllWindows();

The only difference here is that we specify the method for findContours() as CHAIN_APPROX_SIMPLE instead of CHAIN_APPROX_NONE.

The CHAIN_APPROX_SIMPLE algorithm compresses horizontal, vertical, and diagonal segments along the contour and leaves only their end points. This means that any of the points along the straight paths will be dismissed, and we will be left with only the end points. For example, consider a contour, along a rectangle. All the contour points, except the four corner points will be dismissed. This method is faster than the CHAIN_APPROX_NONE because the algorithm does not store all the points, uses less memory, and therefore, takes less time to execute.

The following image shows the results.

If you observe closely, there are almost no differences between the outputs of CHAIN_APPROX_NONE and CHAIN_APPROX_SIMPLE.

Now, why is that?

The credit goes to the drawContours() function. Although the CHAIN_APPROX_SIMPLE method typically results in fewer points, the drawContours() function automatically connects adjacent points, joining them even if they are not in the contours list.

So, how do we confirm that the CHAIN_APPROX_SIMPLE algorithm is actually working?

- The most straightforward way is to loop over the contour points manually, and draw a circle on the detected contour coordinates, using OpenCV.

- Also, we use a different image that will actually help us visualize the results of the algorithm.

The following code uses the above image to visualize the effect of the CHAIN_APPROX_SIMPLE algorithm. Almost everything is the same as in the previous code example, except the two additional for loops and some variable names.

- The first for loop cycles over each contour area present in the contours list.

- The second loops over each of the coordinates in that area.

- We then draw a green circle on each coordinate point, using the circle() function from OpenCV.

- Finally, we visualize the results and save them to disk.

Python:

# to actually visualize the effect of `CHAIN_APPROX_SIMPLE`, we need a proper image

image1 = cv2.imread('input/image_2.jpg')

img_gray1 = cv2.cvtColor(image1, cv2.COLOR_BGR2GRAY)

ret, thresh1 = cv2.threshold(img_gray1, 150, 255, cv2.THRESH_BINARY)

contours2, hierarchy2 = cv2.findContours(thresh1, cv2.RETR_TREE,

cv2.CHAIN_APPROX_SIMPLE)

image_copy2 = image1.copy()

cv2.drawContours(image_copy2, contours2, -1, (0, 255, 0), 2, cv2.LINE_AA)

cv2.imshow('SIMPLE Approximation contours', image_copy2)

cv2.waitKey(0)

image_copy3 = image1.copy()

for i, contour in enumerate(contours2): # loop over one contour area

    for j, contour_point in enumerate(contour): # loop over the points

        # draw a circle on the current contour coordinate (cast to plain ints)

        cv2.circle(image_copy3, (int(contour_point[0][0]), int(contour_point[0][1])), 2, (0, 255, 0), 2, cv2.LINE_AA)

# see the results

cv2.imshow('CHAIN_APPROX_SIMPLE Point only', image_copy3)

cv2.waitKey(0)

cv2.imwrite('contour_point_simple.jpg', image_copy3)

cv2.destroyAllWindows()

C++:

// using a proper image for visualizing CHAIN_APPROX_SIMPLE

Mat image1 = imread("input/image_2.jpg");

Mat img_gray1;

cvtColor(image1, img_gray1, COLOR_BGR2GRAY);

Mat thresh1;

threshold(img_gray1, thresh1, 150, 255, THRESH_BINARY);

vector<vector<Point>> contours2;

vector<Vec4i> hierarchy2;

findContours(thresh1, contours2, hierarchy2, RETR_TREE, CHAIN_APPROX_SIMPLE);

Mat image_copy2 = image1.clone();

drawContours(image_copy2, contours2, -1, Scalar(0, 255, 0), 2);

imshow("SIMPLE Approximation contours", image_copy2);

waitKey(0);

imwrite("contours_simple_image2.jpg", image_copy2);

destroyAllWindows();

Mat image_copy3 = image1.clone();

for (size_t i = 0; i < contours2.size(); i++) {

    for (size_t j = 0; j < contours2[i].size(); j++) {

        // draw a circle on the j-th point of the i-th contour

        circle(image_copy3, contours2[i][j], 2, Scalar(0, 255, 0), 2);

    }

}

imshow("CHAIN_APPROX_SIMPLE Point only", image_copy3);

waitKey(0);

imwrite("contour_point_simple.jpg", image_copy3);

destroyAllWindows();

Executing the code above, produces the following result:

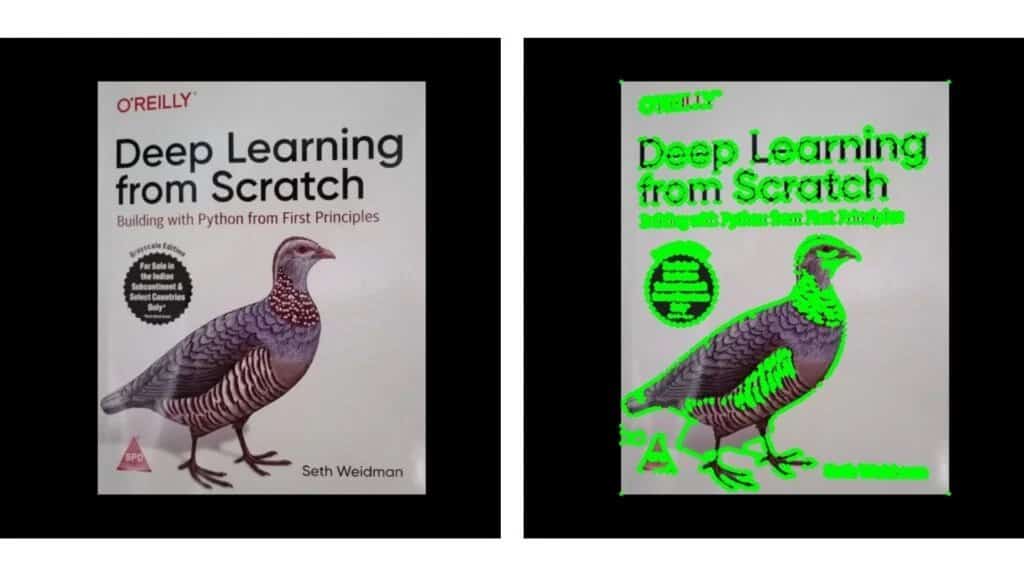

Observe the output image, which is on the right-hand side in the above figure. Note that the vertical and horizontal sides of the book contain only four points at the corners of the book. Also observe that the letters and bird are indicated with discrete points and not line segments.

Contour Hierarchies

Hierarchies denote the parent-child relationship between contours. You will see how each contour-retrieval mode affects contour detection in images, and produces hierarchical results.

Parent-Child Relationship

Objects detected by contour-detection algorithms in an image could be:

- Single objects scattered around in an image (as in the first example), or

- Objects and shapes inside one another

In most cases, where a shape contains more shapes, we can safely conclude that the outer shape is a parent of the inner shape.

Take a look at the following figure, it contains several simple shapes that will help demonstrate contour hierarchies.

Now see the figure below, where the contours associated with each shape in Figure 10 have been identified. Each of the numbers in Figure 11 has a significance.

- All the individual numbers, i.e., 1, 2, 3, and 4 are separate objects, according to the contour hierarchy and parent-child relationship.

- We can say that the 3a is a child of 3. Note that 3a represents the interior portion of contour 3.

- Contours 1, 2, and 4 are all parent shapes, without any associated child, and their numbering is thus arbitrary. In other words, contour 2 could have been labeled as 1 and vice-versa.

Contour Relationship Representation

You’ve seen that the findContours() function returns two outputs: The contours list, and the hierarchy. Let’s now understand the contour hierarchy output in detail.

The contour hierarchy is represented as an array, which in turn contains arrays of four values. It is represented as:

[Next, Previous, First_Child, Parent]

So, what do all these values mean?

Next: Denotes the next contour in an image, which is at the same hierarchical level. So,

- For contour 1, the next contour at the same hierarchical level is 2. Here, Next will be 2.

- Accordingly, contour 3 has no next contour at the same hierarchical level. So, its Next value will be -1.

Previous: Denotes the previous contour at the same hierarchical level. This means that contour 1 will always have its Previous value as -1.

First_Child: Denotes the first child contour of the contour we are currently considering.

- Contours 1 and 2 have no children at all. So, the index values for their First_Child will be -1.

- But contour 3 has a child. So, for contour 3, the First_Child position value will be the index position of 3a.

Parent: Denotes the parent contour’s index position for the current contour.

- Contours 1 and 2, as is obvious, do not have any Parent contour.

- For the contour 3a, its Parent is going to be contour 3.

- For contour 4, the parent is contour 3a.

The above explanations make sense, but how do we actually visualize these hierarchy arrays? The best way is to:

- Use a simple image with lines and shapes like the previous image

- Detect the contours and hierarchies, using different retrieval modes

- Then print the values to visualize them

Different Contour Retrieval Techniques

Thus far, we used one specific retrieval technique, RETR_TREE to find and draw contours, but there are three more contour-retrieval techniques in OpenCV, namely, RETR_LIST, RETR_EXTERNAL and RETR_CCOMP.

So let’s now use the image in Figure 10 to review each of these four methods, along with their associated code to get the contours.

The following code reads the image from disk, converts it to grayscale, and applies binary thresholding.

Python:

"""

Contour detection and drawing using different extraction modes to complement

the understanding of hierarchies

"""

image2 = cv2.imread('input/custom_colors.jpg')

img_gray2 = cv2.cvtColor(image2, cv2.COLOR_BGR2GRAY)

ret, thresh2 = cv2.threshold(img_gray2, 150, 255, cv2.THRESH_BINARY)

C++:

/*

Contour detection and drawing using different extraction modes to complement the understanding of hierarchies

*/

Mat image2 = imread("input/custom_colors.jpg");

Mat img_gray2;

cvtColor(image2, img_gray2, COLOR_BGR2GRAY);

Mat thresh2;

threshold(img_gray2, thresh2, 150, 255, THRESH_BINARY);

RETR_LIST

The RETR_LIST contour retrieval method does not create any parent child relationship between the extracted contours. So, for all the contour areas that are detected, the First_Child and Parent index position values are always -1.

All the contours will have their corresponding Previous and Next contours as discussed above.

See how the RETR_LIST method is implemented in code.

Python:

contours3, hierarchy3 = cv2.findContours(thresh2, cv2.RETR_LIST, cv2.CHAIN_APPROX_NONE)

image_copy4 = image2.copy()

cv2.drawContours(image_copy4, contours3, -1, (0, 255, 0), 2, cv2.LINE_AA)

# see the results

cv2.imshow('LIST', image_copy4)

print(f"LIST: {hierarchy3}")

cv2.waitKey(0)

cv2.imwrite('contours_retr_list.jpg', image_copy4)

cv2.destroyAllWindows()

C++:

vector<vector<Point>> contours3;

vector<Vec4i> hierarchy3;

findContours(thresh2, contours3, hierarchy3, RETR_LIST, CHAIN_APPROX_NONE);

Mat image_copy4 = image2.clone();

drawContours(image_copy4, contours3, -1, Scalar(0, 255, 0), 2);

imshow("LIST", image_copy4);

waitKey(0);

imwrite("contours_retr_list.jpg", image_copy4);

destroyAllWindows();

Executing the above code produces the following output:

LIST: [[[ 1 -1 -1 -1]

[ 2 0 -1 -1]

[ 3 1 -1 -1]

[ 4 2 -1 -1]

[-1 3 -1 -1]]]

You can clearly see that the 3rd and 4th index positions of all the detected contour areas are -1, as expected.

RETR_EXTERNAL

The RETR_EXTERNAL contour retrieval method is a really interesting one. It only detects the parent contours, and ignores any child contours. So, all the inner contours like 3a and 4 will not have any points drawn on them.

Python:

contours4, hierarchy4 = cv2.findContours(thresh2, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_NONE)

image_copy5 = image2.copy()

cv2.drawContours(image_copy5, contours4, -1, (0, 255, 0), 2, cv2.LINE_AA)

# see the results

cv2.imshow('EXTERNAL', image_copy5)

print(f"EXTERNAL: {hierarchy4}")

cv2.waitKey(0)

cv2.imwrite('contours_retr_external.jpg', image_copy5)

cv2.destroyAllWindows()

C++:

vector<vector<Point>> contours4;

vector<Vec4i> hierarchy4;

findContours(thresh2, contours4, hierarchy4, RETR_EXTERNAL, CHAIN_APPROX_NONE);

Mat image_copy5 = image2.clone();

drawContours(image_copy5, contours4, -1, Scalar(0, 255, 0), 2);

imshow("EXTERNAL", image_copy5);

waitKey(0);

imwrite("contours_retr_external.jpg", image_copy5);

destroyAllWindows();

The above code produces the following output:

EXTERNAL: [[[ 1 -1 -1 -1]

[ 2 0 -1 -1]

[-1 1 -1 -1]]]

The above output image shows only the points drawn on contours 1, 2, and 3. Contours 3a and 4 are omitted as they are child contours.

RETR_CCOMP

Unlike RETR_EXTERNAL, RETR_CCOMP retrieves all the contours in an image. Along with that, it also applies a 2-level hierarchy to all the shapes or objects in the image.

This means:

- All the outer contours will have hierarchy level 1

- All the inner contours will have hierarchy level 2

But what if we have a contour inside another contour with hierarchy level 2? Just like we have contour 4 after contour 3a.

In that case:

- Again, contour 4 will have hierarchy level 1.

- If there are any contours inside contour 4, they will have hierarchy level 2.

In the following image, the contours have been numbered according to their hierarchy level, as explained above.

The above image shows the hierarchy level as HL-1 or HL-2 for levels 1 and 2 respectively. Now, let us take a look at the code and the output hierarchy array also.

Python:

contours5, hierarchy5 = cv2.findContours(thresh2, cv2.RETR_CCOMP, cv2.CHAIN_APPROX_NONE)

image_copy6 = image2.copy()

cv2.drawContours(image_copy6, contours5, -1, (0, 255, 0), 2, cv2.LINE_AA)

# see the results

cv2.imshow('CCOMP', image_copy6)

print(f"CCOMP: {hierarchy5}")

cv2.waitKey(0)

cv2.imwrite('contours_retr_ccomp.jpg', image_copy6)

cv2.destroyAllWindows()

C++:

vector<vector<Point>> contours5;

vector<Vec4i> hierarchy5;

findContours(thresh2, contours5, hierarchy5, RETR_CCOMP, CHAIN_APPROX_NONE);

Mat image_copy6 = image2.clone();

drawContours(image_copy6, contours5, -1, Scalar(0, 255, 0), 2);

imshow("CCOMP", image_copy6);

// cout << "CCOMP:" << hierarchy5;

waitKey(0);

imwrite("contours_retr_ccomp.jpg", image_copy6);

destroyAllWindows();

Executing the above code produces the following output:

CCOMP: [[[ 1 -1 -1 -1]

[ 3 0 2 -1]

[-1 -1 -1 1]

[ 4 1 -1 -1]

[-1 3 -1 -1]]]

Here, we see that all the Next, Previous, First_Child, and Parent relationships are maintained, according to the contour-retrieval method, as all the contours are detected. As expected, the Previous of the first contour area is -1. The contours that do not have any Parent also have the value -1.

RETR_TREE

Just like RETR_CCOMP, RETR_TREE also retrieves all the contours. It also creates a complete hierarchy, with the levels not restricted to 1 or 2. Each contour can have its own hierarchy, in line with the level it is on, and the corresponding parent-child relationship that it has.

From the above figure, it is clear that:

- Contours 1, 2, and 3 are at the same level, that is level 0.

- Contour 3a is present at hierarchy level 1, as it is a child of contour 3.

- Contour 4 is a child of contour 3a, so its hierarchy level is 2.

The following code uses RETR_TREE mode to retrieve contours.

Python:

contours6, hierarchy6 = cv2.findContours(thresh2, cv2.RETR_TREE, cv2.CHAIN_APPROX_NONE)

image_copy7 = image2.copy()

cv2.drawContours(image_copy7, contours6, -1, (0, 255, 0), 2, cv2.LINE_AA)

# see the results

cv2.imshow('TREE', image_copy7)

print(f"TREE: {hierarchy6}")

cv2.waitKey(0)

cv2.imwrite('contours_retr_tree.jpg', image_copy7)

cv2.destroyAllWindows()

C++:

vector<vector<Point>> contours6;

vector<Vec4i> hierarchy6;

findContours(thresh2, contours6, hierarchy6, RETR_TREE, CHAIN_APPROX_NONE);

Mat image_copy7 = image2.clone();

drawContours(image_copy7, contours6, -1, Scalar(0, 255, 0), 2);

imshow("TREE", image_copy7);

// cout << "TREE:" << hierarchy6;

waitKey(0);

imwrite("contours_retr_tree.jpg", image_copy7);

destroyAllWindows();

Executing the above code produces the following output:

TREE: [[[ 3 -1 1 -1]

[-1 -1 2 0]

[-1 -1 -1 1]

[ 4 0 -1 -1]

[-1 3 -1 -1]]]

Finally, let’s look at the complete image with all the contours drawn when using RETR_TREE mode.

All the contours are drawn as expected, and the contour areas are clearly visible. You can also infer that contours 3 and 3a are two separate contours, as they have different contour boundaries and areas. At the same time, it is very evident that contour 3a is a child of contour 3.

Now that you are familiar with all the contour algorithms available in OpenCV, along with their respective input parameters and configurations, go experiment and see for yourself how they work.

A Run Time Comparison of Different Contour Retrieval Methods

It’s not enough to know the contour-retrieval methods. You should also be aware of their relative processing time. The following table compares the runtime for each method discussed above.

| Contour Retrieval Method | Time Taken (in seconds) |
| --- | --- |
| RETR_LIST | 0.000382 |
| RETR_EXTERNAL | 0.000554 |
| RETR_CCOMP | 0.001845 |
| RETR_TREE | 0.005594 |

Some interesting conclusions emerge from the above table:

- RETR_LIST and RETR_EXTERNAL take the least amount of time to execute, since RETR_LIST does not define any hierarchy and RETR_EXTERNAL only retrieves the parent contours.

- RETR_CCOMP takes the second highest time to execute. It retrieves all the contours and defines a two-level hierarchy.

- RETR_TREE takes the maximum time to execute, for it retrieves all the contours and defines the independent hierarchy level for each parent-child relationship as well.

Although the above times may not seem significant, it is important to be aware of the differences for applications that may require a significant amount of contour processing. It is also worth noting that this processing time may vary, depending to an extent on the contours they extract, and the hierarchy levels they define.

Limitations

So far, all the examples we explored seemed interesting, and their results encouraging. However, there are cases where the contour algorithm might fail to deliver meaningful and useful results. Let’s consider such an example too.

- When the objects in an image are strongly contrasted against their background, you can clearly identify the contours associated with each object. But what if you have an image like Figure 16 below? It not only has a bright object (puppy), but also a cluttered background with the same value (brightness) as the object of interest (puppy). You find that the contours in the right-hand image are not even complete. Also, there are multiple unwanted contours standing out in the background area.

- Contour detection can also fail, when the background of the object in the image is full of lines.


Summary

You started with contour detection, and learned to implement it in OpenCV. You saw how applications use contours for mobility detection and segmentation. Next, we demonstrated the use of four different retrieval modes and two contour-approximation methods. You also learned to draw contours. We concluded with a discussion of contour hierarchies, and how different contour-retrieval modes affect the drawing of contours on an image.

Key takeaways:

- The contour-detection algorithms in OpenCV work very well, when the image has a dark background and a well-defined object-of-interest.

- But when the background of the input image is cluttered or has the same pixel intensity as the object-of-interest, the algorithms don’t fare so well.

You have all the code here, why not experiment with different images now! Try images containing varied shapes, and experiment with different threshold values. Also, explore different retrieval modes, using test images that contain nested contours. This way, you can fully appreciate the hierarchical relationships between objects.


A contour is a curve joining all the continuous points along a boundary that have the same color or intensity. Contours come in handy for shape analysis, for finding the size of an object of interest, and for object detection.

OpenCV provides the findContours() function, which extracts the contours from an image. It works best on binary images, so we should first apply a thresholding technique, Sobel edges, or a similar step.

Below is the code for finding contours:

import cv2

import numpy as np

cv2.waitKey(0)

gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)

edged = cv2.Canny(gray, 30, 200)

cv2.waitKey(0)

contours, hierarchy = cv2.findContours(edged,

cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_NONE)

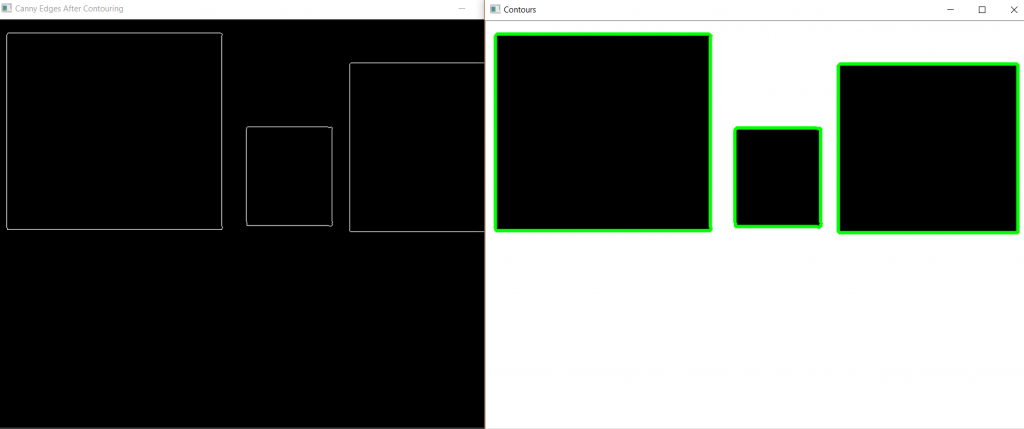

cv2.imshow('Canny Edges After Contouring', edged)

cv2.waitKey(0)

print("Number of Contours found = " + str(len(contours)))

cv2.drawContours(image, contours, -1, (0, 255, 0), 3)

cv2.imshow('Contours', image)

cv2.waitKey(0)

cv2.destroyAllWindows()

Output:

We see that cv2.findContours() takes three essential arguments: the first is the source image, the second is the contour retrieval mode, and the third is the contour approximation method. In OpenCV 4.x it returns the contours and the hierarchy (OpenCV 3.x also returned the image as a first value). ‘contours’ is a Python sequence of all the contours in the image (a list in older releases, a tuple in recent ones). Each individual contour is a NumPy array of (x, y) coordinates of boundary points of the object.

Contour Approximation Method

Above, we saw that contours are the boundaries of a shape with the same intensity. A contour stores the (x, y) coordinates of that boundary. But does it store all the coordinates? That is determined by the contour approximation method.

If we pass cv2.CHAIN_APPROX_NONE, all the boundary points are stored. But do we actually need all the points? For example, to represent the contour of a straight line we need only its two endpoints. This is what cv2.CHAIN_APPROX_SIMPLE does: it removes the redundant points and compresses the contour, thereby saving memory.
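The idea behind this compression can be sketched in plain Python. This is a conceptual illustration only, not OpenCV's implementation; the helper names `compress_contour` and `rectangle_boundary` are hypothetical.

```python
def compress_contour(points):
    """Drop points that lie in the middle of a straight run.

    `points` is a closed contour given as a list of (x, y) tuples in which
    consecutive points are neighbouring pixels. A point is kept only when
    the step direction changes at that point.
    """
    n = len(points)
    if n < 3:
        return list(points)
    kept = []
    for i in range(n):
        px, py = points[i - 1]          # previous point (wraps around)
        cx, cy = points[i]
        nx, ny = points[(i + 1) % n]    # next point (wraps around)
        step_in = (cx - px, cy - py)
        step_out = (nx - cx, ny - cy)
        if step_in != step_out:
            kept.append((cx, cy))
    return kept


def rectangle_boundary(x0, y0, x1, y1):
    """All boundary pixels of an axis-aligned rectangle, in order."""
    top = [(x, y0) for x in range(x0, x1)]
    right = [(x1, y) for y in range(y0, y1)]
    bottom = [(x, y1) for x in range(x1, x0, -1)]
    left = [(x0, y) for y in range(y1, y0, -1)]
    return top + right + bottom + left


full = rectangle_boundary(10, 10, 59, 39)   # every boundary pixel
simple = compress_contour(full)             # only the direction changes
print(len(full), "->", len(simple), simple)
# prints: 156 -> 4 [(10, 10), (59, 10), (59, 39), (10, 39)]
```

For a rectangle, 156 boundary points collapse to the 4 corners, which is the memory saving the paragraph above describes.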

Last Updated: 04 Jan, 2023


In this tutorial, we are going to see another image processing technique: detecting edges and contours in an image.

Edge detection is a fundamental task in computer vision. It can be defined as the task of finding boundaries between regions that have different properties, such as brightness or texture.

Simply put, edge detection is the process of locating edges in an image. An edge is typically an abrupt transition from a pixel value of one color to another, such as from black to white.
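A toy one-dimensional example shows what "abrupt transition" means in practice. The pixel values and the threshold below are arbitrary assumptions chosen for illustration; real edge detectors work on two-dimensional gradients.

```python
# One row of grayscale pixel values: dark on the left, bright on the right.
row = [12, 11, 13, 12, 200, 201, 199, 200]

# Absolute difference between each pixel and its left neighbour.
diffs = [abs(b - a) for a, b in zip(row, row[1:])]

# Arbitrary threshold (an assumption): anything above it counts as an edge.
threshold = 50
edges = [i + 1 for i, d in enumerate(diffs) if d > threshold]

print(diffs)   # prints: [1, 2, 1, 188, 1, 2, 1]
print(edges)   # prints: [4]
```

The small differences are noise within a region; the single large jump at index 4 is the edge between the dark and bright regions.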

This article is part 11 of the tutorial series on computer vision and image processing with OpenCV:

- How to Read, Write, and Save Images with OpenCV and Python

- How to Read and Write Videos with OpenCV and Python

- How to Resize Images with OpenCV and Python

- How to Crop Images with OpenCV and Python

- How to Rotate Images with OpenCV and Python

- How to Annotate Images with OpenCV and Python (coming soon)

- Bitwise Operations and Image Masking with OpenCV and Python

- Image Filtering and Blurring with OpenCV and Python

- Image Thresholding with OpenCV and Python

- Morphological Operations with OpenCV and Python

- Edge and Contour Detection with OpenCV and Python (this article)

Canny Edge Detector

The Canny edge detector is a multi-stage algorithm for detecting edges in an image. It was created by John F. Canny in 1986 and published in the paper "A Computational Approach to Edge Detection". It is one of the most popular techniques for edge detection, not just because of its simplicity, but also because it generates high-quality results.

The Canny edge detector algorithm has four steps:

- Noise reduction by blurring the image using a Gaussian blur.

- Computing the intensity gradients of the image.

- Non-maximum suppression to thin the edges.

- Hysteresis thresholding to decide which edges to keep.

Read the paper above if you want to learn how the algorithm works. We will not go into the theory and the mathematics behind this algorithm; instead, we will write some code to see how to use it and how it works.

So let’s get started.

import cv2

image = cv2.imread("objects.jpg")

gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)

blurred = cv2.GaussianBlur(gray, (3, 3), 0)

edged = cv2.Canny(blurred, 10, 100)

cv2.imshow("Original image", image)

cv2.imshow("Edged image", edged)

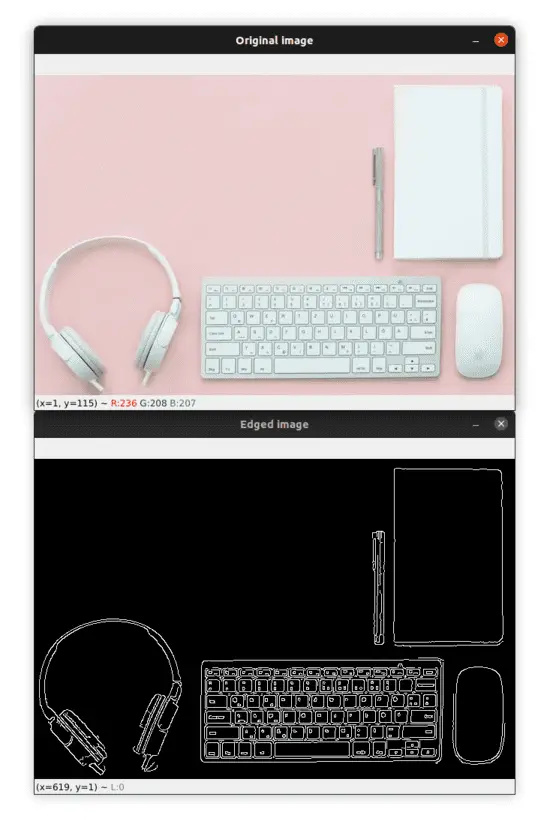

cv2.waitKey(0)We start by loading our image, converting it to grayscale, and applying the cv2.GaussianBlur to blur the image and remove noise.

Next, we apply the Canny edge detector using the cv2.Canny function. This function takes 3 required parameters and 3 optional parameters. In our case, we only used the required parameters.

The first argument is the image on which we want to detect the edges. The second and third arguments are the thresholds used for the hysteresis procedure.

The output image is shown below:

As you can see, the algorithm has found the most important edges in the image. Try using different values for the threshold parameters to see how they influence the edge detection.
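To build some intuition for what the two thresholds do, here is a toy one-dimensional sketch of hysteresis thresholding. It is a simplified illustration under assumed inputs, not OpenCV's implementation; the function name `hysteresis_1d` and the sample gradient values are hypothetical.

```python
def hysteresis_1d(gradient, low, high):
    """Keep strong values, plus weak values connected to a strong one."""
    strong = [g >= high for g in gradient]
    weak = [low <= g < high for g in gradient]
    keep = list(strong)
    # Grow outwards from every strong position through neighbouring weak ones.
    stack = [i for i, s in enumerate(strong) if s]
    while stack:
        i = stack.pop()
        for j in (i - 1, i + 1):
            if 0 <= j < len(gradient) and weak[j] and not keep[j]:
                keep[j] = True
                stack.append(j)
    return keep


gradient = [0, 40, 120, 60, 0, 50, 0]

# One strong value (120); its weak neighbours survive, the isolated 50 does not.
print(hysteresis_1d(gradient, 30, 100))
# prints: [False, True, True, True, False, False, False]

# Raising the upper threshold above 120 leaves no strong value, so nothing is kept.
print(hysteresis_1d(gradient, 30, 130))
# prints: [False, False, False, False, False, False, False]
```

The lower threshold controls how far an edge may extend through weaker gradients, while the upper threshold decides whether an edge is started at all.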

Now let’s move on to contour detection!

Contour Detection

Contours are the basic building blocks for computer vision. They are what allow computers to detect general shapes and sizes of objects that are in an image so that they can be classified, segmented, and identified.

Using OpenCV, we can find the contours by following these steps:

- Convert the image into a binary image. We can use thresholding or edge detection. We will be using the Canny edge detector.

- Find the contours using the cv2.findContours function.

- Draw the contours on the image using the cv2.drawContours function.

We already converted our image into a binary image in the previous section using the Canny edge detector, so we just have to find the contours and draw them on the image.

Let’s see how to do it:

# find the contours in the edged image

contours, _ = cv2.findContours(edged, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)

image_copy = image.copy()

# draw the contours on a copy of the original image

cv2.drawContours(image_copy, contours, -1, (0, 255, 0), 2)

print(len(contours), "objects were found in this image.")

cv2.imshow("Edged image", edged)

cv2.imshow("contours", image_copy)

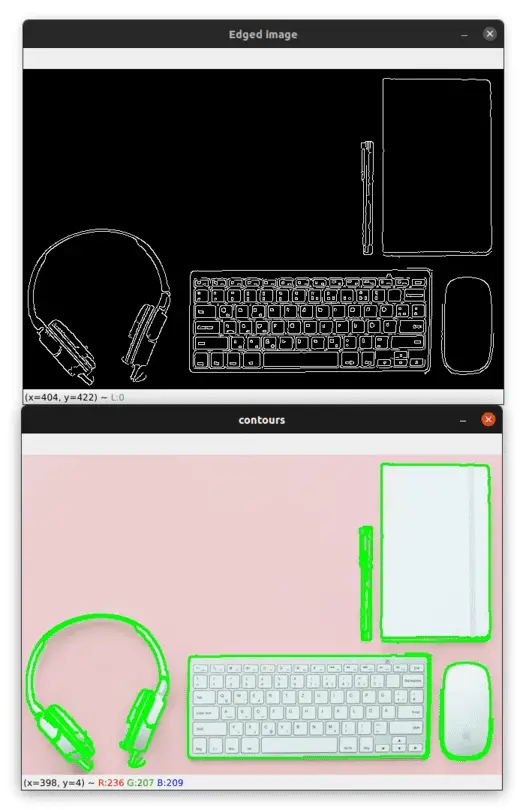

cv2.waitKey(0)We used the binary image we got from the Canny edge detector to find the contours of the objects. We find the contours by calling the cv2.findContours function. This function takes 3 required arguments and 3 optional arguments.

Here we only used the required arguments. The first argument is the binary image. Note that since OpenCV 3.2 the source image is not modified by this function, so we don't need to pass a copy of the image; we can simply pass the original.

The second argument is the contour retrieval mode. By using cv2.RETR_EXTERNAL we only retrieve the outer contours of the objects on the image. See RetrievalModes for other possible options.

The third argument to this function is the contour approximation method. In our case we used cv2.CHAIN_APPROX_SIMPLE, which will compress horizontal, vertical, and diagonal segments to keep only their end points. See ContourApproximationModes for the possible options.

The function then returns a tuple with two elements (this is the case for OpenCV v4). The first element is the contours detected on the image and the second element is the hierarchy of the contours.

Next, we make a copy of the original image which we will use to draw the contours on it. Drawing the contours is performed using the cv2.drawContours function.

The first argument to this function is the image on which we want to draw the contours. Again, we have drawn the contours on a copy of the image because we don’t want to alter the original image.

The second argument is the contours, and the third argument is the index of the contour to draw; a negative value draws all the contours.

The fourth argument is the color of the contours (in our case it is a green color) and the last argument is the thickness of the lines of the contours.

You can see the result of this operation in the image below:

As you can see, the algorithm identified all the boundaries of the objects and also some contours inside the objects.

The contours variable is a list containing all the contours found by the algorithm, so we can use the built-in len() function to count the number of contours.

So if we want to count the number of objects in the image we need to detect only the contours of the boundaries of the objects.

In the previous example, if you print the number of contours you’ll see that the algorithm detected 14 contours in the image.

For the contour detection algorithm to detect only the boundaries of the objects, so that len() returns the number of objects in the image, we have to apply the dilation operation to the binary image.

Let’s see if morphological operations will help us to solve this issue:

image = cv2.imread("objects.jpg")
gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)
blurred = cv2.GaussianBlur(gray, (3, 3), 0)
edged = cv2.Canny(blurred, 10, 100)

# define a (3, 3) structuring element
kernel = cv2.getStructuringElement(cv2.MORPH_RECT, (3, 3))

# apply the dilation operation to the edged image
dilate = cv2.dilate(edged, kernel, iterations=1)

# find the contours in the dilated image
contours, _ = cv2.findContours(dilate, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)
image_copy = image.copy()

# draw the contours on a copy of the original image
cv2.drawContours(image_copy, contours, -1, (0, 255, 0), 2)
print(len(contours), "objects were found in this image.")

cv2.imshow("Dilated image", dilate)
cv2.imshow("contours", image_copy)
cv2.waitKey(0)

This time, after applying the Canny edge detector and before finding the contours, we apply the dilation operation to the binary image in order to add some pixels and enlarge the foreground objects. This allows the contour detection algorithm to detect only the boundaries of the objects in the image.

Take a look at the image below to see the result after applying the dilation morphological operations:

This time the algorithm detected only the boundaries of the objects and if you check your terminal you’ll see the output «5 objects were found in this image». Great!